- What is container orchestration?

- Kubernetes architecture

- Kubernetes resources

- Pods

- ReplicaSets

- Deployments

- Services

- Deploy an application to Kubernetes

- Scale the application

- Access the application using Kubernetes Services

What do I need to start with Kubernetes?

- Docker Desktop

- Access to a Kubernetes cluster. See "Which Kubernetes cluster should I use"

- Kubernetes CLI (kubectl)

Which Kubernetes cluster should I use?

With Docker Desktop, you can access Kubernetes services through localhost. You can access these services using both kind and Minikube as well; however, that requires you to run additional commands.

Kubernetes and contexts

The Kubernetes CLI is called kubectl, and it allows you to run commands against your cluster. To make sure everything is working correctly, you can run kubectl get nodes to list all nodes in the Kubernetes cluster. You should get an output similar to this one:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

docker-desktop Ready master 63d v1.16.6-beta.0

The single node in this output is named docker-desktop. Kubernetes uses a configuration file called config to find the information it needs to connect to the cluster. This file is located in your home folder, for example $HOME/.kube/config. A context is an element inside that config file, and it contains a reference to the cluster, the namespace, and the user. If you're accessing or running a single cluster, you will only have one context in the config file. However, you can have multiple contexts defined that point to different clusters.

Using the kubectl config command, you can view these contexts and switch between them. You can run the current-context command to view the current context:

$ kubectl config current-context

docker-desktop

You can use the use-context command to switch to the docker-desktop context:

$ kubectl config use-context docker-desktop

Switched to context "docker-desktop".

You can also run kubectl config get-contexts to get the list of all Kubernetes contexts. The currently selected context is indicated with * in the CURRENT column:

$ kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

docker-desktop docker-desktop docker-desktop

* minikube minikube minikube

peterjk8s peterjk8s clusterUser_mykubecluster_peterjk8s

There are other commands available as well, such as use-context, set-context, get-contexts, and so on. I prefer to use a tool called kubectx. This tool allows you to quickly switch between different Kubernetes contexts. For example, if I have three clusters (contexts) set in the config file, running kubectx outputs this:

$ kubectx

docker-desktop

peterj-cluster

minikube

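To switch to the minikube context, I can run: kubectx minikube.

The equivalent commands using kubectl would be kubectl config get-contexts to view all contexts, and kubectl config use-context minikube to switch the context.

Before you continue, make sure you are using the correct context: docker-desktop if you're using Docker for Mac/Windows.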
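To make the relationship between contexts, clusters, and users more concrete, here is a minimal Python sketch that models the config file as a plain dictionary and resolves the current context. The dictionary only mirrors the general layout of the file; the user names and the resolve_context and use_context helpers are made up for illustration, and this is not how kubectl itself is implemented.

```python
# Hypothetical, trimmed-down model of $HOME/.kube/config as a Python dict.
# The cluster and user names are illustrative assumptions.
KUBECONFIG = {
    "current-context": "docker-desktop",
    "contexts": [
        {"name": "docker-desktop",
         "context": {"cluster": "docker-desktop", "user": "docker-desktop"}},
        {"name": "minikube",
         "context": {"cluster": "minikube", "user": "minikube",
                     "namespace": "default"}},
    ],
}


def resolve_context(config, name=None):
    """Return (cluster, user, namespace) for a context.

    When no name is given, the current-context entry is used.
    """
    name = name or config["current-context"]
    for entry in config["contexts"]:
        if entry["name"] == name:
            ctx = entry["context"]
            # A context without an explicit namespace falls back to "default".
            return ctx["cluster"], ctx["user"], ctx.get("namespace", "default")
    raise KeyError(f"no context named {name!r}")


def use_context(config, name):
    """Switch the current context, similar in spirit to use-context."""
    resolve_context(config, name)  # raises KeyError if the context is unknown
    config["current-context"] = name


print(resolve_context(KUBECONFIG))
use_context(KUBECONFIG, "minikube")
print(resolve_context(KUBECONFIG))
```

The point of the sketch is that a context is only a named pointer: switching contexts changes which cluster, user, and namespace later commands are resolved against, without touching the clusters themselves.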

What is container orchestration?

- Provision and deploy containers based on available resources

- Perform health monitoring on containers

- Load balancing and service discovery

- Allocate resources between different containers

- Scaling the containers up and down

Note

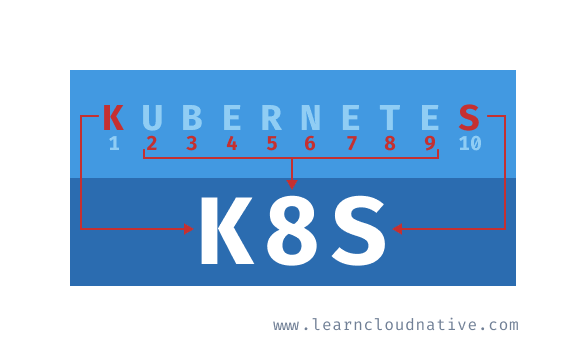

Frequently, you will see Kubernetes being referred to as "K8S". K8S is a numeronym for Kubernetes. The first (K) and the last letter (S) are first and the last letters in the word Kubernetes, and 8 is the number of characters between those two letters. Other popular numeronyms are "i18n" for internationalization or "a11y" for accessibility.
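The numeronym rule described above is simple enough to express in a few lines. The following Python sketch is only an illustration of that rule; the numeronym function name is made up.

```python
def numeronym(word):
    """Abbreviate a word as first letter + number of inner letters + last letter."""
    if len(word) < 3:
        return word  # nothing to abbreviate
    return f"{word[0]}{len(word) - 2}{word[-1]}"


for word in ("kubernetes", "internationalization", "accessibility"):
    print(word, "->", numeronym(word))
```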

Kubernetes vs. Docker?

Kubernetes vs. Docker Swarm?

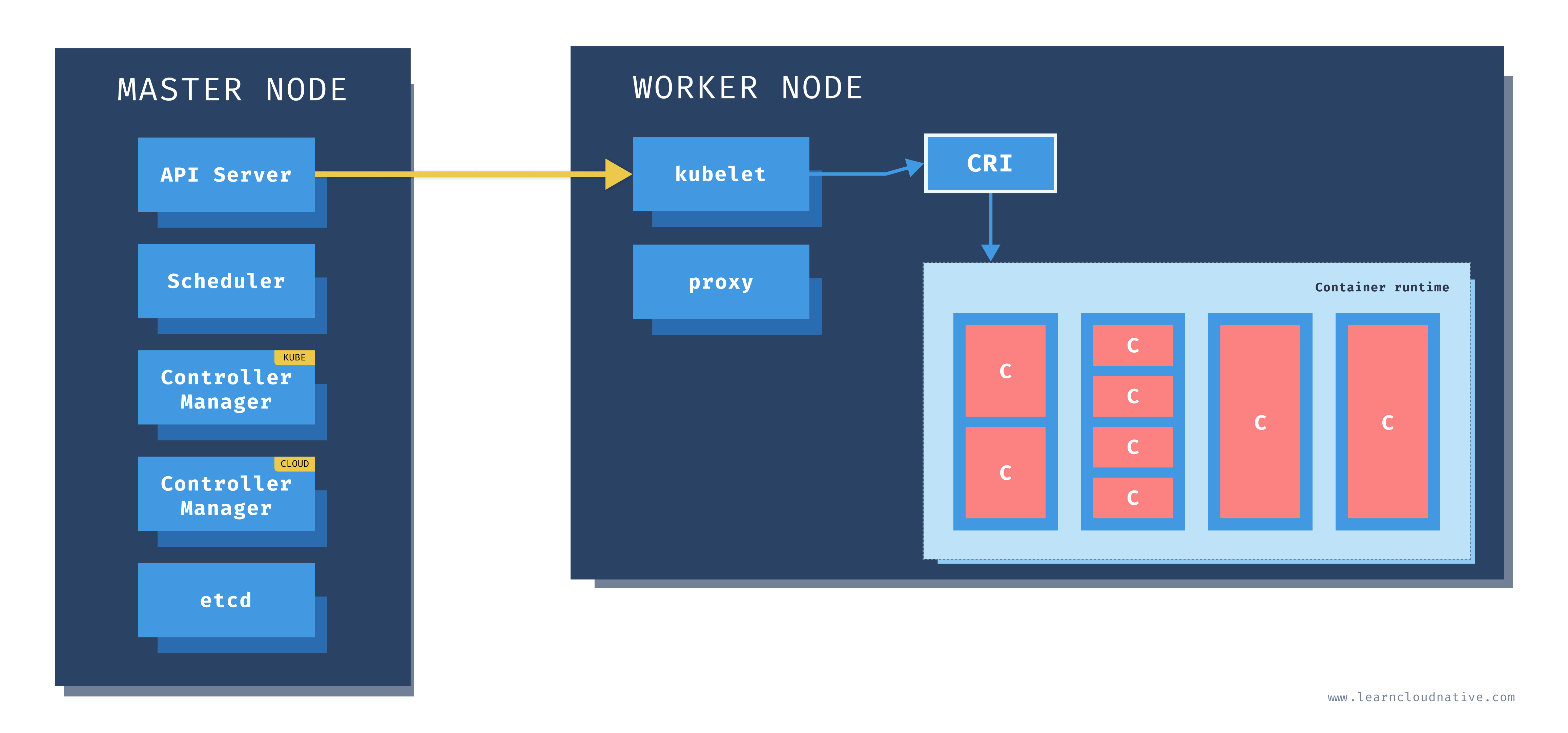

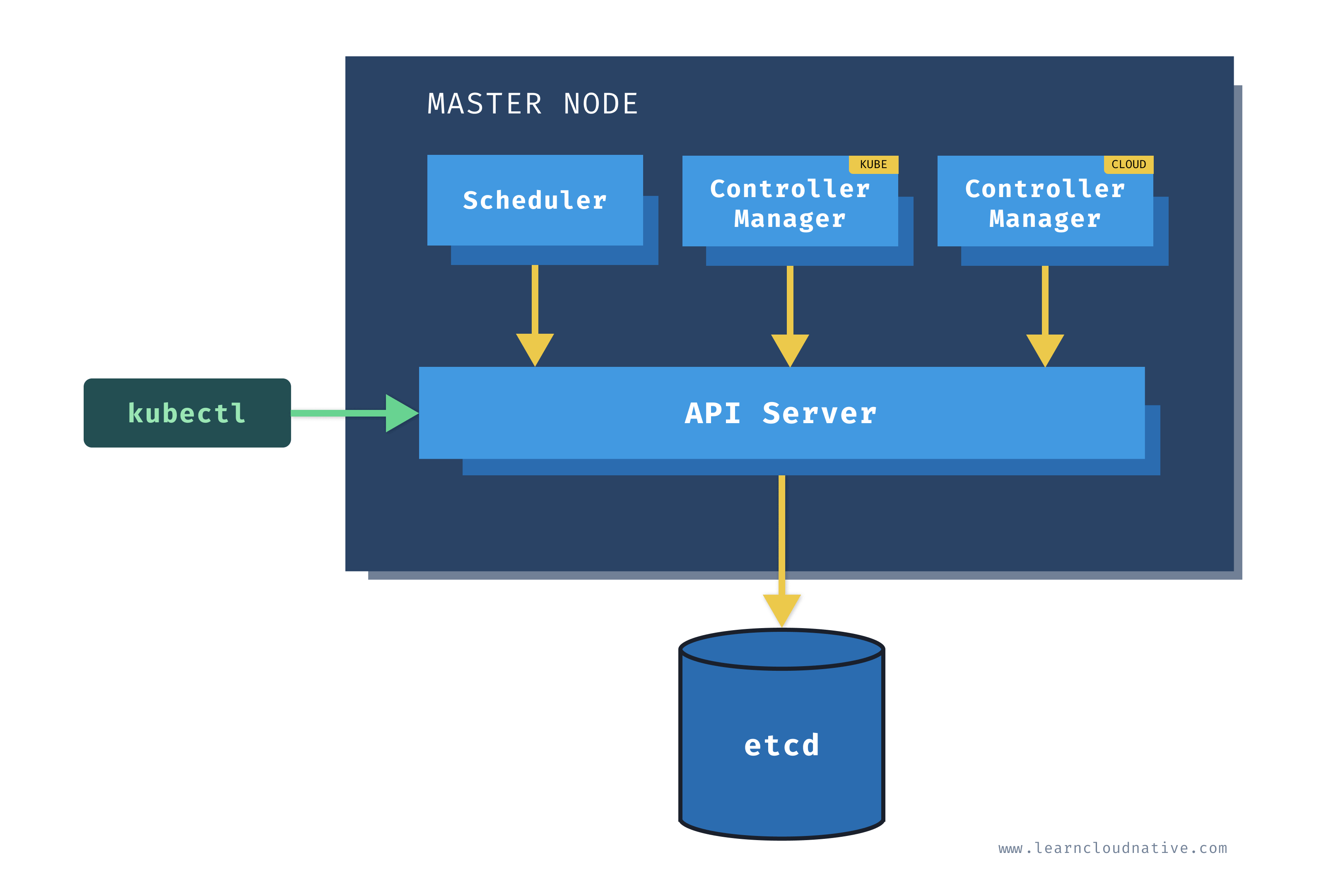

Kubernetes architecture

- Master node(s): this node hosts the Kubernetes control plane and manages the cluster

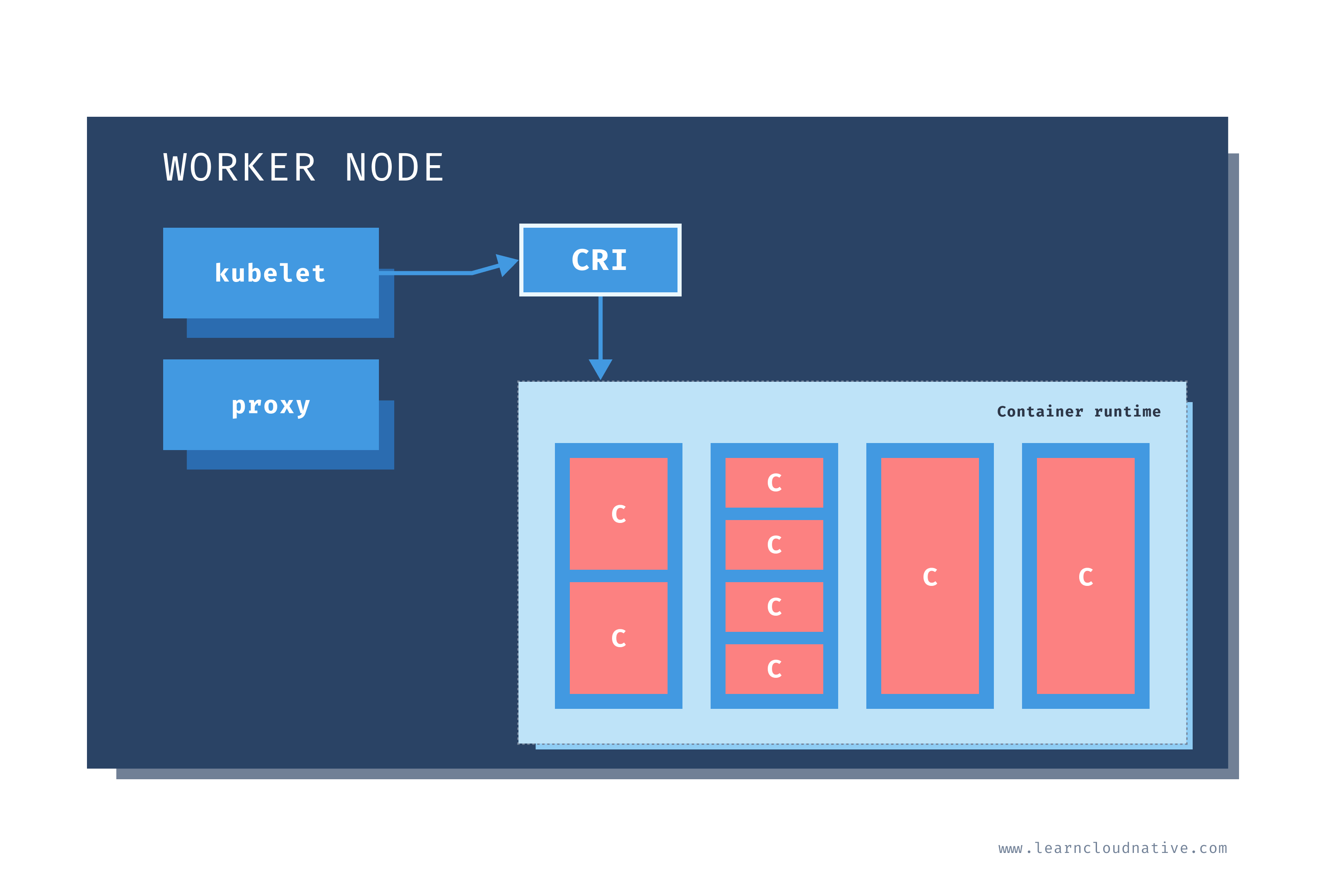

- Worker node(s): runs your containerized applications

Master node

The API server is the component that the Kubernetes CLI (kubectl) talks to when you're creating Kubernetes resources or managing the cluster.

Worker node

Kubernetes resources

You can list the resources your cluster supports with the kubectl api-resources command. It will list all defined resources, and there will be a lot of them.

Each resource definition uses the apiVersion and kind fields to describe which version of the Kubernetes API you're using when creating the resource (for example, apps/v1) and what kind of resource you are creating (for example, Deployment, Pod, Service, etc.).

The metadata includes the data that can help to identify the resource you are creating. This usually includes a name (for example, mydeployment) and the namespace where the resource will be created. There are also other fields that you can provide in the metadata, such as labels and annotations, and a couple that get added after you create the resource (such as creationTimestamp).

Pods

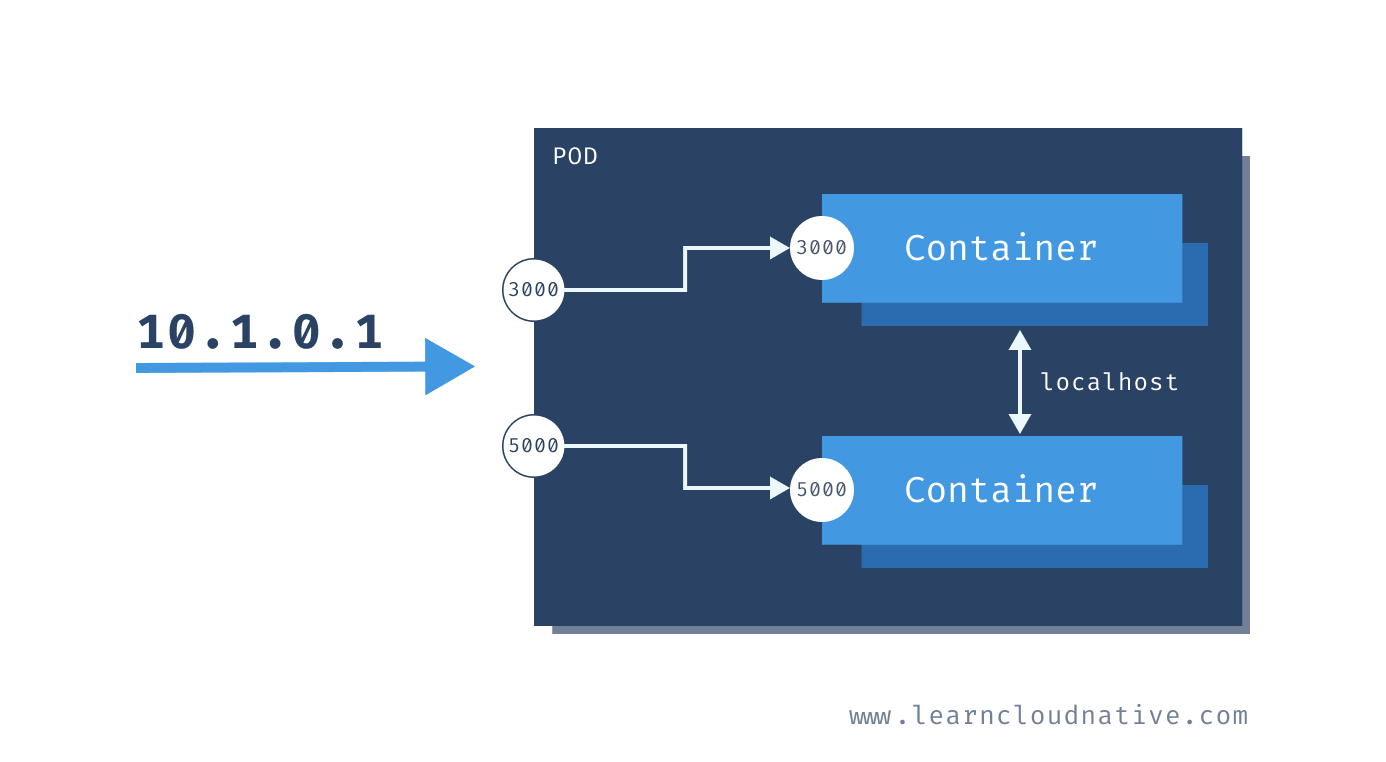

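Containers within the same Pod share the network namespace and can reach each other through localhost.

For example, with a Pod IP of 10.1.0.1, you could use curl 10.1.0.1:3000 to communicate with one container and curl 10.1.0.1:5000 to communicate with the other container. However, if you wanted to talk between containers, for example, calling the top container from the bottom one, you could use http://localhost:3000.

Scaling Pods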

Note

"Awesome! I can run my application and a database in the same pod!!" No, do not do that!

Creating Pods

    apiVersion: v1
    kind: Pod
    metadata:
      name: hello-pod
      labels:
        app: hello
    spec:
      containers:
        - name: hello-container
          image: busybox
          command: ['sh', '-c', 'echo Hello from my container! && sleep 3600']

At the top of the YAML, we specify the kind of resource (Pod) and the metadata. The metadata includes the name of our pod (hello-pod) and a set of labels that are simple key-value pairs (app=hello).

In the spec section, we describe what the pod should look like. We will have a single container inside this pod, called hello-container, and it will run the image called busybox. When the container has started, the command defined in the command field will be executed.

To create the pod, save the above YAML to a file, pod.yaml for example. Then, you can use the Kubernetes CLI (kubectl) to create the pod:

$ kubectl create -f pod.yaml

pod/hello-pod created

You can use kubectl get pods to get a list of all Pods running in the default namespace of the cluster:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-pod 1/1 Running 0 7s

You can use the logs command to see the output from the container running inside the Pod:

$ kubectl logs hello-pod

Hello from my container!

Note

If you had multiple containers running inside the same Pod, you could use the -c flag to specify the name of the container you want to get the logs from. For example: kubectl logs hello-pod -c hello-container

If we delete this Pod using kubectl delete pod hello-pod, the Pod will be gone forever. There's nothing that would automatically restart the Pod. If you run kubectl get pods again, you will notice the Pod is gone:

$ kubectl get pods

No resources found in default namespace.

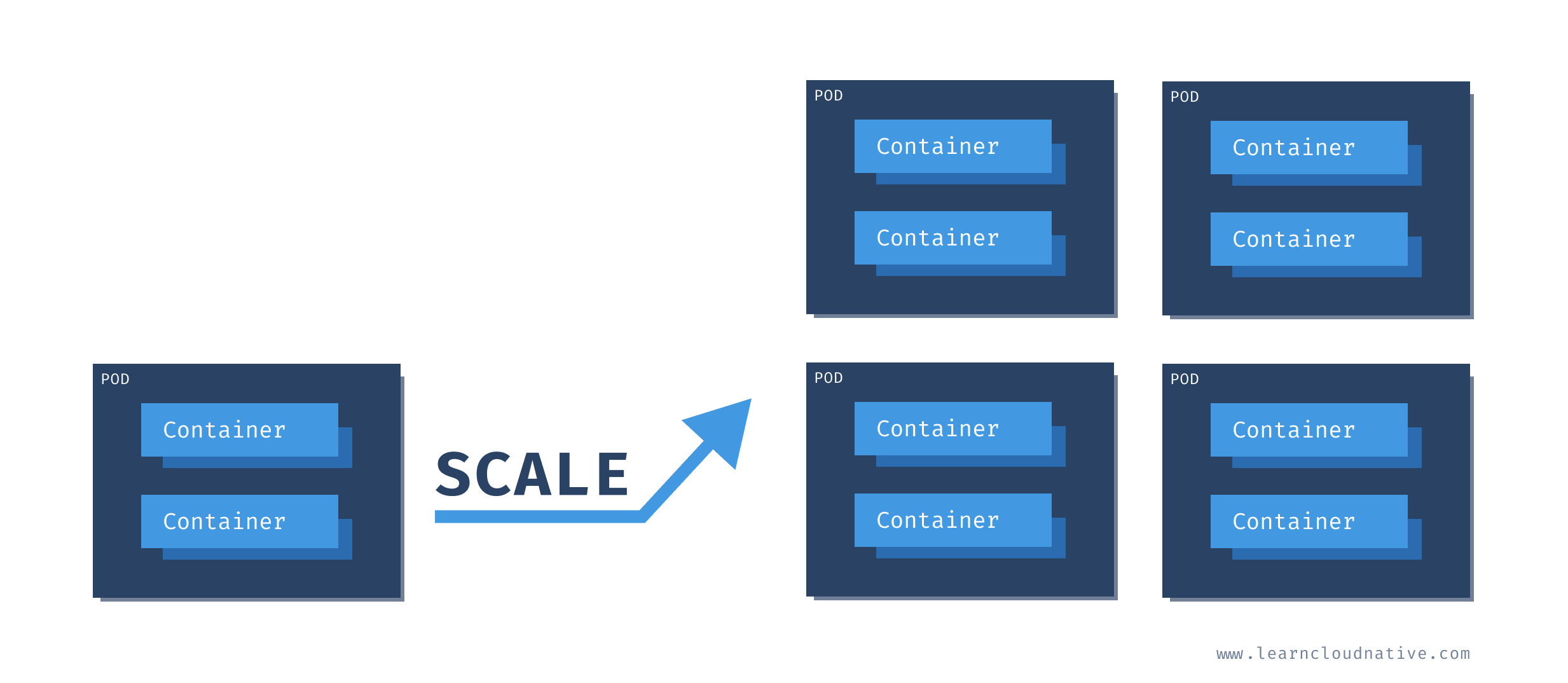

What are ReplicaSets?

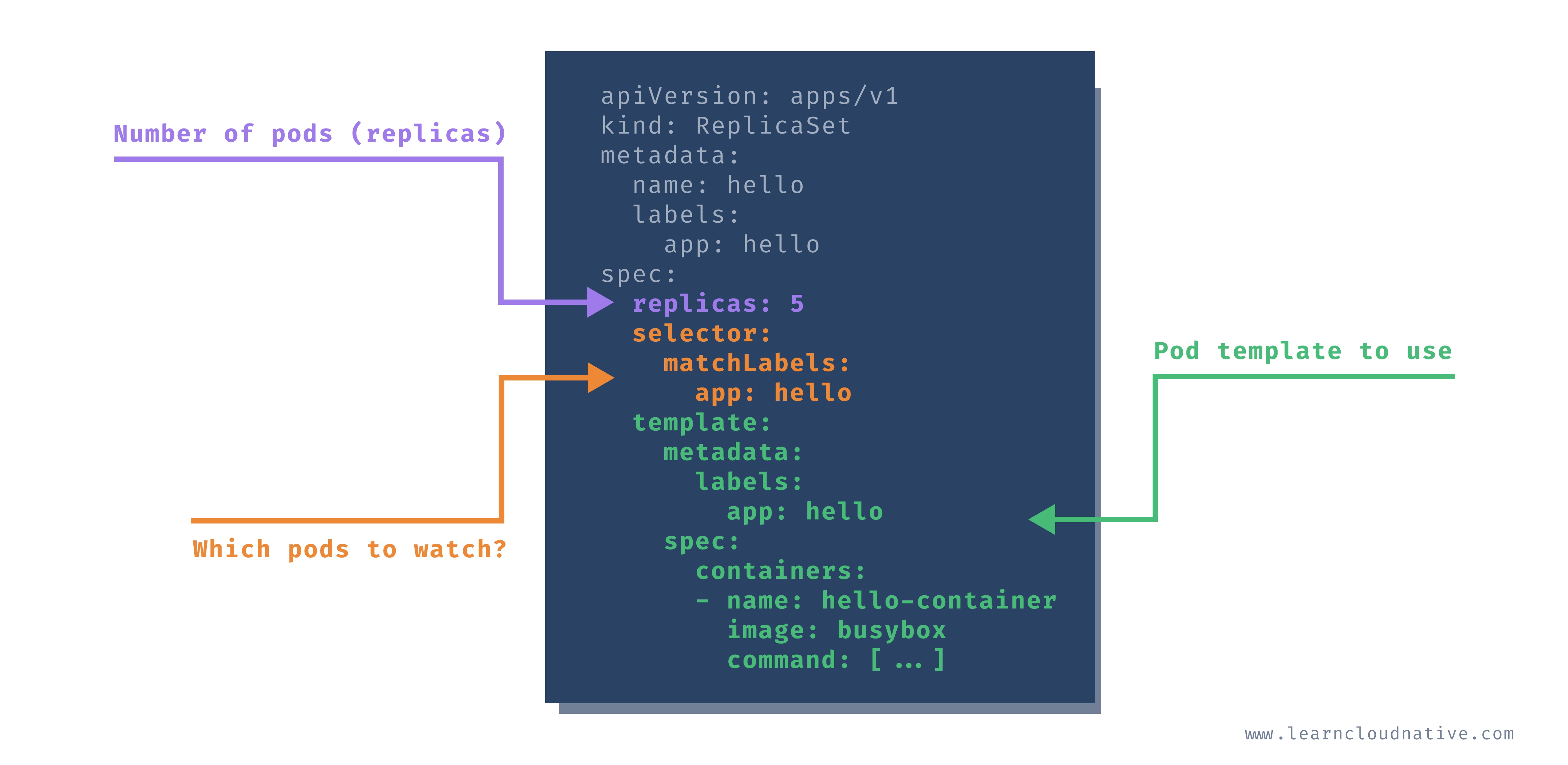

The number of Pod replicas is controlled using the replicas field in the resource definition.

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: hello
      labels:
        app: hello
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: hello
      template:
        metadata:
          labels:
            app: hello
        spec:
          containers:
            - name: hello-container
              image: busybox
              command: ['sh', '-c', 'echo Hello from my container! && sleep 3600']

Every Pod that's created by a ReplicaSet can be identified by the metadata.ownerReferences field. This field specifies which ReplicaSet owns the Pod. If any of the Pods owned by the ReplicaSet is deleted, the ReplicaSet knows about it and acts accordingly (i.e. re-creates the Pod).

The ReplicaSet also uses the selector object and matchLabels to check for any new Pods that it might own. If there's a new Pod that matches the ReplicaSet's selector labels and it doesn't have an owner reference, or its owner is not a controller (i.e. if we manually create a Pod), the ReplicaSet will take it over and start controlling it.
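The ownership rules above can be sketched as a single reconciliation pass. The following Python code is a simplified model and not the actual controller: Pods are plain dictionaries, the reconcile function and the generated Pod names are made up for illustration, and the choice of which surplus Pod to delete is a deliberate simplification.

```python
import itertools

_counter = itertools.count(1)


def reconcile(rs_name, selector, replicas, pods):
    """Return the Pods a ReplicaSet-like controller owns after one pass.

    Each Pod is a dict with "name", "labels", and "owner" (None for a
    manually created Pod).
    """
    owned = []
    for pod in pods:
        matches = all(pod["labels"].get(k) == v for k, v in selector.items())
        if matches and pod["owner"] is None:
            pod = {**pod, "owner": rs_name}  # adopt the orphaned Pod
        if pod["owner"] == rs_name:
            owned.append(pod)

    # Too many Pods: delete the surplus. Dropping from the end of the list
    # is a simplification; the real controller has its own ordering rules.
    owned = owned[:replicas]

    # Too few Pods: create replacements from the Pod template.
    while len(owned) < replicas:
        owned.append({"name": f"{rs_name}-new{next(_counter)}",
                      "labels": dict(selector), "owner": rs_name})
    return owned


selector = {"app": "hello"}
pods = [{"name": f"hello-{s}", "labels": {"app": "hello"}, "owner": "hello"}
        for s in ("dwx89", "fchvr", "fl6hd", "n667q", "rftkf")]

del pods[0]  # a Pod gets deleted
pods = reconcile("hello", selector, 5, pods)
print(len(pods), "Pods after the deletion")

# A manually created Pod with a matching label gets adopted, then removed.
pods.append({"name": "stray-pod", "labels": {"app": "hello"}, "owner": None})
pods = reconcile("hello", selector, 5, pods)
print([p["name"] for p in pods])
```

In both cases the controller only compares the number of Pods it owns with the desired replica count and corrects the difference.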

Save the above YAML contents to the replicaset.yaml file and run:

$ kubectl create -f replicaset.yaml

replicaset.apps/hello created

$ kubectl get replicaset

NAME DESIRED CURRENT READY AGE

hello 5 5 5 30s

$ kubectl get po

NAME READY STATUS RESTARTS AGE

hello-dwx89 1/1 Running 0 31s

hello-fchvr 1/1 Running 0 31s

hello-fl6hd 1/1 Running 0 31s

hello-n667q 1/1 Running 0 31s

hello-rftkf 1/1 Running 0 31s

If you run kubectl get po -l=app=hello, you will get all Pods that have the app=hello label set. At the moment, these are the same 5 Pods we created.

Let's also look at the ownerReferences field. We can use the -o yaml flag to get the YAML representation of any object in Kubernetes. Once we get the YAML, we will search for the ownerReferences string:

$ kubectl get po hello-dwx89 -o yaml | grep -A5 ownerReferences

...

    ownerReferences:
    - apiVersion: apps/v1
      blockOwnerDeletion: true
      controller: true
      kind: ReplicaSet
      name: hello

In the ownerReferences, the name of the owner is set to hello, and the kind is set to ReplicaSet. This is the ReplicaSet that owns the Pod.

Note

Notice how we used po in the command to refer to Pods? Some Kubernetes resources have short names that can be used in place of the full name. You can use po when you mean pods, or deploy when you mean deployment. To get the full list of supported short names, you can run kubectl api-resources.

Also notice how the Pods are named. Previously, when we created a single Pod, its name was hello-pod, because that's what we specified in the YAML. This time, when using the ReplicaSet, Pod names are a combination of the ReplicaSet name (hello) and a semi-random string such as dwx89, fchvr, and so on.

Note

Semi-random? Yes, vowels and numbers 0,1, and 3 were removed to avoid creating 'bad words'.
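As an illustration of the naming scheme, here is a small Python sketch that builds Pod-style names from an alphabet without vowels and without the digits 0, 1, and 3, as described in the note. The pod_name helper is hypothetical, and the exact alphabet Kubernetes uses may differ in detail from this approximation.

```python
import random
import string

# Alphabet as described in the note: lowercase letters and digits, minus
# vowels and the digits 0, 1, and 3. This is an approximation for
# illustration; the exact alphabet Kubernetes uses may differ.
ALPHABET = "".join(
    ch for ch in string.ascii_lowercase + string.digits
    if ch not in "aeiou013"
)


def pod_name(owner_name, length=5, rng=random):
    """Build a Pod-style name: owner name plus a semi-random suffix."""
    suffix = "".join(rng.choice(ALPHABET) for _ in range(length))
    return f"{owner_name}-{suffix}"


print(pod_name("hello"))
```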

Let's try deleting one of the Pods. To delete a resource from Kubernetes, you use the delete keyword followed by the resource (e.g. pod) and the resource name (hello-dwx89):

$ kubectl delete po hello-dwx89

pod "hello-dwx89" deleted

Once you've deleted the Pod, you can run kubectl get pods again. Did you notice something? There are still 5 Pods running.

$ kubectl get po

NAME READY STATUS RESTARTS AGE

hello-fchvr 1/1 Running 0 14m

hello-fl6hd 1/1 Running 0 14m

hello-n667q 1/1 Running 0 14m

hello-rftkf 1/1 Running 0 14m

hello-vctkh 1/1 Running 0 48s

If you look at the AGE column, you will notice four Pods that were created 14 minutes ago and one that was created recently. This is the doing of the ReplicaSet. When we deleted one Pod, the number of actual replicas decreased from 5 to 4. The ReplicaSet controller detected that and created a new Pod to match the desired number of replicas (5).

Let's try something different now. We will manually create a Pod with a label that matches the ReplicaSet's selector labels (app=hello).

    apiVersion: v1
    kind: Pod
    metadata:
      name: stray-pod
      labels:
        app: hello
    spec:
      containers:
        - name: stray-pod-container
          image: busybox
          command: ['sh', '-c', 'echo Hello from stray pod! && sleep 3600']

Store the above YAML in the stray-pod.yaml file, then create the Pod by running:

$ kubectl create -f stray-pod.yaml

pod/stray-pod created

The Pod is created, and all looks good. However, if you run kubectl get pods, you will notice that the stray-pod is nowhere to be seen. What happened?

The ReplicaSet makes sure only five Pod replicas are running. When we manually created the stray-pod with the label (app=hello) matching the selector label on the ReplicaSet, the ReplicaSet took that new Pod for its own. Remember, the manually created Pod didn't have an owner. With this new Pod under the ReplicaSet's management, the number of replicas was six, not five as stated in the ReplicaSet. Therefore, the ReplicaSet did what it's supposed to do: it deleted the new Pod to maintain the five replicas.

Zero-downtime updates?

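Let's say we want to change the image used in the Pods from busybox to busybox:1.31.1. We could use kubectl edit rs hello to open the ReplicaSet YAML in the editor, then update the image value.

After saving the change, we can check which image an existing Pod is using:

$ kubectl describe po hello-fchvr | grep image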

Normal Pulling 14m kubelet, docker-desktop Pulling image "busybox"

Normal Pulled 13m kubelet, docker-desktop Successfully pulled image "busybox"

Notice the references to the busybox image, but there's no sign of busybox:1.31.1 anywhere. Let's see what happens if we delete this same Pod:

$ kubectl delete po hello-fchvr

pod "hello-fchvr" deleted

We already know that the ReplicaSet will bring up a new Pod (hello-q8fnl in our case) to match the desired replica count. If we run describe against the new Pod that came up, you will notice that the image has changed this time:

$ kubectl describe po hello-q8fnl | grep image

Normal Pulling 74s kubelet, docker-desktop Pulling image "busybox:1.31"

Normal Pulled 73s kubelet, docker-desktop Successfully pulled image "busybox:1.31"

The same would happen if we deleted the other Pods that are still using the old image (busybox). The ReplicaSet would start new Pods, and this time the Pods would use the new image, busybox:1.31.1.

Let's delete the ReplicaSet by running kubectl delete rs hello. rs is the short name for replicaset. If you list the Pods (kubectl get po) right after you issue the delete command, you will see the Pods being terminated:

NAME READY STATUS RESTARTS AGE

hello-fchvr 1/1 Terminating 0 18m

hello-fl6hd 1/1 Terminating 0 18m

hello-n667q 1/1 Terminating 0 18m

hello-rftkf 1/1 Terminating 0 18m

hello-vctkh 1/1 Terminating 0 7m39s

Deployments

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello
      labels:
        app: hello
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: hello
      template:
        metadata:
          labels:
            app: hello
        spec:
          containers:
            - name: hello-container
              image: busybox
              command: ['sh', '-c', 'echo Hello from my container! && sleep 3600']

Save the above YAML to deployment.yaml and create the deployment:

$ kubectl create -f deployment.yaml --record

deployment.apps/hello created

Note

Why the --record flag? Using this flag, we are telling Kubernetes to store the command we executed in the annotation called kubernetes.io/change-cause. This is useful for tracking the changes or commands that were executed when the deployment was updated. You will see this in action later on when we do rollouts. Note that newer kubectl releases mark the --record flag as deprecated.

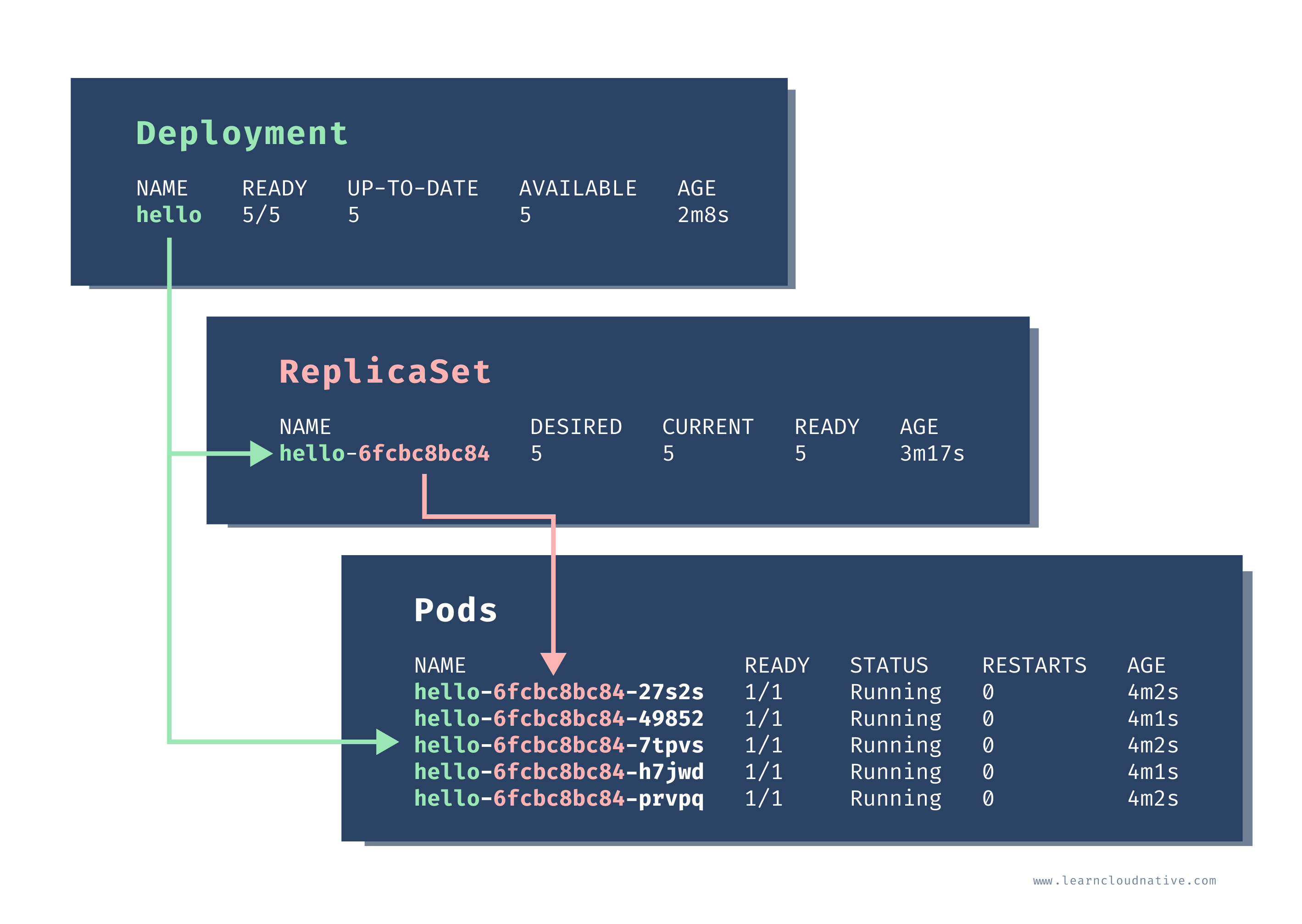

We can list the resources that were created using the get command:

$ kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

hello 5/5 5 5 2m8s

$ kubectl get rs

NAME DESIRED CURRENT READY AGE

hello-6fcbc8bc84 5 5 5 3m17s

$ kubectl get po

NAME READY STATUS RESTARTS AGE

hello-6fcbc8bc84-27s2s 1/1 Running 0 4m2s

hello-6fcbc8bc84-49852 1/1 Running 0 4m1s

hello-6fcbc8bc84-7tpvs 1/1 Running 0 4m2s

hello-6fcbc8bc84-h7jwd 1/1 Running 0 4m1s

hello-6fcbc8bc84-prvpq 1/1 Running 0 4m2s

When we created a ReplicaSet previously, the Pods were named like this: hello-fchvr. However, this time, the Pod names are a bit longer: hello-6fcbc8bc84-27s2s. The middle section of the name, 6fcbc8bc84, corresponds to the random section of the ReplicaSet name, and the Pod names are created by combining the deployment name, the ReplicaSet name, and a random string.

$ kubectl delete po hello-6fcbc8bc84-27s2s

pod "hello-6fcbc8bc84-27s2s" deleted

$ kubectl get po

NAME READY STATUS RESTARTS AGE

hello-6fcbc8bc84-49852 1/1 Running 0 46m

hello-6fcbc8bc84-58q7l 1/1 Running 0 15s

hello-6fcbc8bc84-7tpvs 1/1 Running 0 46m

hello-6fcbc8bc84-h7jwd 1/1 Running 0 46m

hello-6fcbc8bc84-prvpq 1/1 Running 0 46m

How to scale the Pods up or down?

The Kubernetes CLI has a handy command called scale. Using this command, we can scale up (or down) the number of Pods controlled by a Deployment or a ReplicaSet:

$ kubectl scale deployment hello --replicas=3

deployment.apps/hello scaled

$ kubectl get po

NAME READY STATUS RESTARTS AGE

hello-6fcbc8bc84-49852 1/1 Running 0 48m

hello-6fcbc8bc84-7tpvs 1/1 Running 0 48m

hello-6fcbc8bc84-h7jwd 1/1 Running 0 48m

$ kubectl scale deployment hello --replicas=5

deployment.apps/hello scaled

$ kubectl get po

NAME READY STATUS RESTARTS AGE

hello-6fcbc8bc84-49852 1/1 Running 0 49m

hello-6fcbc8bc84-7tpvs 1/1 Running 0 49m

hello-6fcbc8bc84-h7jwd 1/1 Running 0 49m

hello-6fcbc8bc84-kmmzh 1/1 Running 0 6s

hello-6fcbc8bc84-wfh8c 1/1 Running 0 6s

Updating Pod templates

Let's use the set image command to update the image in the Pod templates from busybox to busybox:1.31.1.

Note

The set command can be used to update parts of the Pod template, such as the image name, environment variables, resources, and a couple of others.

$ kubectl set image deployment hello hello-container=busybox:1.31.1 --record

deployment.apps/hello image updated

If you run kubectl get pods right after you execute the set command, you might see something like this:

$ kubectl get po

NAME READY STATUS RESTARTS AGE

hello-6fcbc8bc84-49852 1/1 Terminating 0 57m

hello-6fcbc8bc84-7tpvs 0/1 Terminating 0 57m

hello-6fcbc8bc84-h7jwd 1/1 Terminating 0 57m

hello-6fcbc8bc84-kmmzh 0/1 Terminating 0 7m15s

hello-84f59c54cd-8khwj 1/1 Running 0 36s

hello-84f59c54cd-fzcf2 1/1 Running 0 32s

hello-84f59c54cd-k947l 1/1 Running 0 33s

hello-84f59c54cd-r8cv7 1/1 Running 0 36s

hello-84f59c54cd-xd4hb 1/1 Running 0 35s

Remember the --record flag we set? We can now use the rollout history command to view the previous rollouts:

$ kubectl rollout history deploy hello

deployment.apps/hello

REVISION CHANGE-CAUSE

1 kubectl create --filename=deployment.yaml --record=true

2 kubectl set image deployment hello hello-container=busybox:1.31.1 --record=true

Using the rollout command, we can also roll back to a previous revision of the resource. To roll back, you can use the rollout undo command, like this:

$ kubectl rollout undo deploy hello

deployment.apps/hello rolled back

$ kubectl rollout history deploy hello

deployment.apps/hello

REVISION CHANGE-CAUSE

2 kubectl set image deployment hello hello-container=busybox:1.31.1 --record=true

3 kubectl create --filename=deployment.yaml --record=true

$ kubectl delete deploy hello

deployment.apps "hello" deleted

Deployment strategies

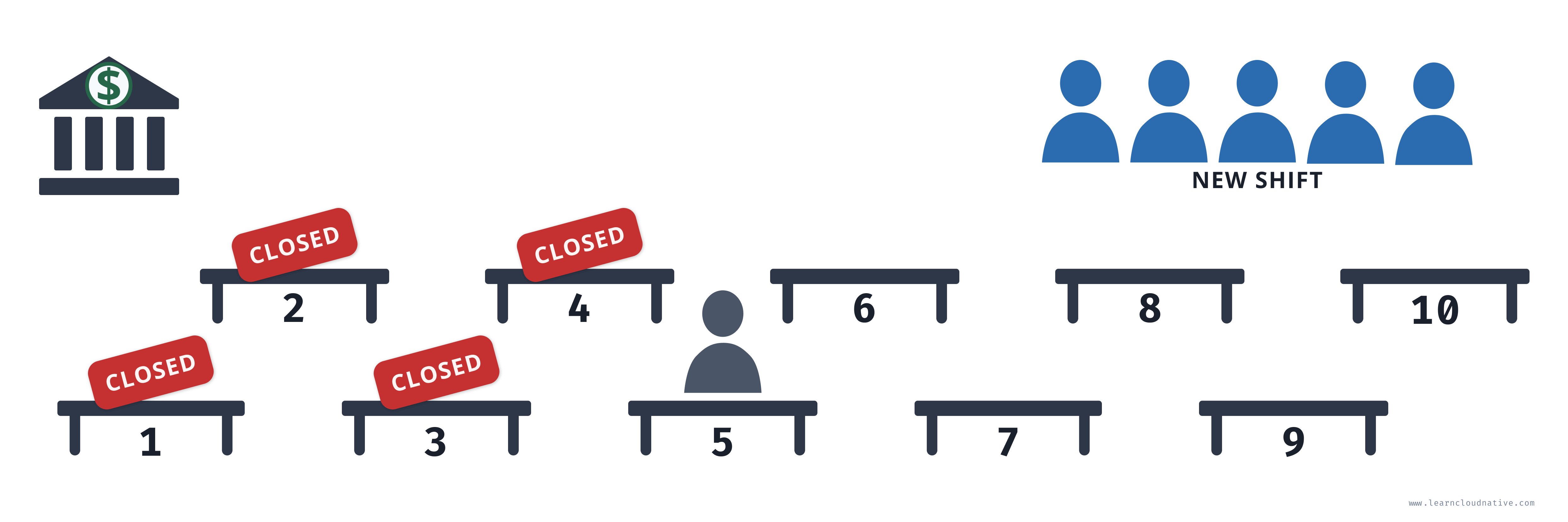

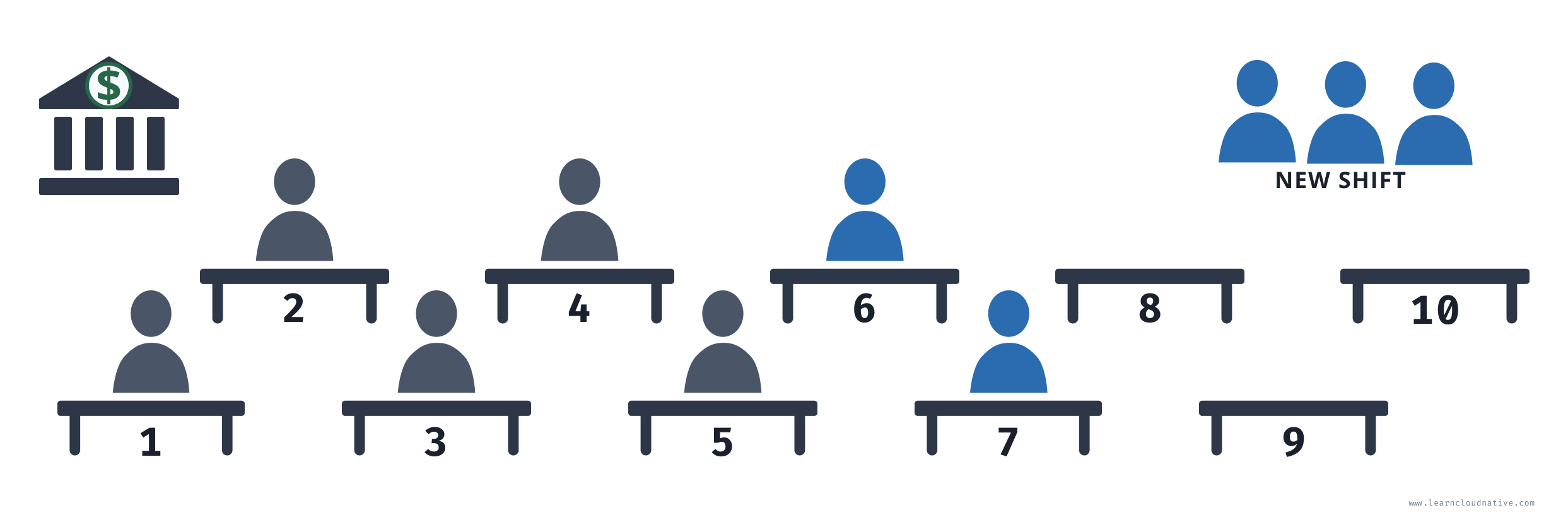

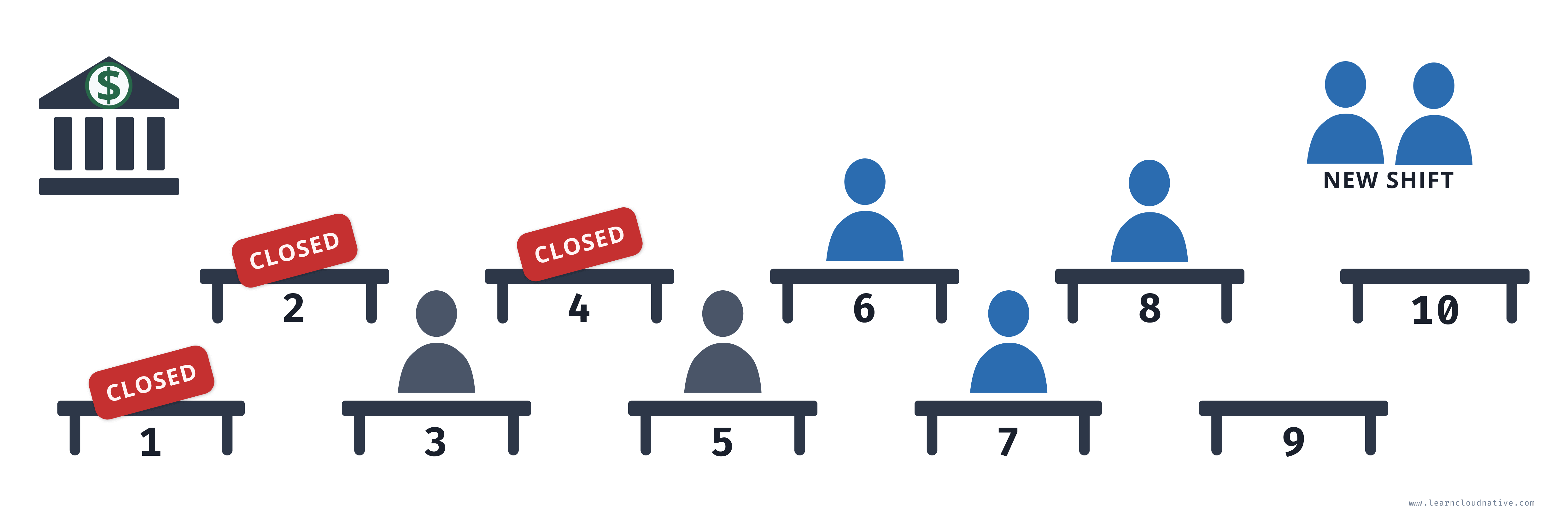

The maxUnavailable and maxSurge settings specify the maximum number of Pods that can be unavailable and the maximum number of old and new Pods that can be running at the same time.

Recreate strategy

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello
      labels:
        app: hello
    spec:
      replicas: 5
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: hello
      template:
        metadata:
          labels:
            app: hello
        spec:
          containers:
            - name: hello-container
              image: busybox
              command: ['sh', '-c', 'echo Hello from my container! && sleep 3600']

Copy the above YAML to the deployment-recreate.yaml file and create it:

kubectl create -f deployment-recreate.yaml

To see the Recreate strategy in action, open a second terminal window and use the --watch flag when listing all Pods. The --watch flag keeps the command running, and any changes to the Pods are written to the screen.

kubectl get pods --watch

From the first terminal window, update the image from busybox to busybox:1.31.1 by running:

kubectl set image deployment hello hello-container=busybox:1.31.1

NAME READY STATUS RESTARTS AGE

hello-6fcbc8bc84-jpm64 1/1 Running 0 54m

hello-6fcbc8bc84-wsw6s 1/1 Running 0 54m

hello-6fcbc8bc84-wwpk2 1/1 Running 0 54m

hello-6fcbc8bc84-z2dqv 1/1 Running 0 54m

hello-6fcbc8bc84-zpc5q 1/1 Running 0 54m

hello-6fcbc8bc84-z2dqv 1/1 Terminating 0 56m

hello-6fcbc8bc84-wwpk2 1/1 Terminating 0 56m

hello-6fcbc8bc84-wsw6s 1/1 Terminating 0 56m

hello-6fcbc8bc84-jpm64 1/1 Terminating 0 56m

hello-6fcbc8bc84-zpc5q 1/1 Terminating 0 56m

hello-6fcbc8bc84-wsw6s 0/1 Terminating 0 56m

hello-6fcbc8bc84-z2dqv 0/1 Terminating 0 56m

hello-6fcbc8bc84-zpc5q 0/1 Terminating 0 56m

hello-6fcbc8bc84-jpm64 0/1 Terminating 0 56m

hello-6fcbc8bc84-wwpk2 0/1 Terminating 0 56m

hello-6fcbc8bc84-z2dqv 0/1 Terminating 0 56m

hello-84f59c54cd-77hpt 0/1 Pending 0 0s

hello-84f59c54cd-77hpt 0/1 Pending 0 0s

hello-84f59c54cd-9st7n 0/1 Pending 0 0s

hello-84f59c54cd-lxqrn 0/1 Pending 0 0s

hello-84f59c54cd-9st7n 0/1 Pending 0 0s

hello-84f59c54cd-lxqrn 0/1 Pending 0 0s

hello-84f59c54cd-z9s5s 0/1 Pending 0 0s

hello-84f59c54cd-8f2pt 0/1 Pending 0 0s

hello-84f59c54cd-77hpt 0/1 ContainerCreating 0 0s

hello-84f59c54cd-z9s5s 0/1 Pending 0 0s

hello-84f59c54cd-8f2pt 0/1 Pending 0 0s

hello-84f59c54cd-9st7n 0/1 ContainerCreating 0 1s

hello-84f59c54cd-lxqrn 0/1 ContainerCreating 0 1s

hello-84f59c54cd-z9s5s 0/1 ContainerCreating 0 1s

hello-84f59c54cd-8f2pt 0/1 ContainerCreating 0 1s

hello-84f59c54cd-77hpt 1/1 Running 0 3s

hello-84f59c54cd-lxqrn 1/1 Running 0 4s

hello-84f59c54cd-9st7n 1/1 Running 0 5s

hello-84f59c54cd-8f2pt 1/1 Running 0 6s

hello-84f59c54cd-z9s5s 1/1 Running 0 7s

Delete the deployment by running kubectl delete deploy hello before continuing. You can also press CTRL+C to stop running the --watch command from the second terminal window (keep the window open as we will use it again shortly).

Rolling update strategy

The rolling update strategy is controlled by two settings: maxUnavailable and maxSurge. Both of these settings are optional and have default values set: 25% for both settings.

The maxUnavailable setting specifies the maximum number of Pods that can be unavailable during the rollout process. You can set it to an actual number or a percentage of desired Pods.

Let's say maxUnavailable is set to 40%. When the update starts, the old ReplicaSet is scaled down to 60%. As soon as new Pods are started and ready, the old ReplicaSet is scaled down again and the new ReplicaSet is scaled up. This happens in such a way that the total number of available Pods (old and new, since we are scaling up and down) is always at least 60%.

The maxSurge setting specifies the maximum number of Pods that can be created over the desired number of Pods. If we use the same percentage as before (40%), the new ReplicaSet is scaled up right away when the rollout starts. The new ReplicaSet will be scaled up in such a way that it does not exceed 140% of desired Pods. As old Pods get killed, the new ReplicaSet scales up again, making sure it never goes over 140% of desired Pods.

Let's create the deployment again, but this time we will use the RollingUpdate strategy.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello
      labels:
        app: hello
    spec:
      replicas: 10
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 40%
          maxSurge: 40%
      selector:
        matchLabels:
          app: hello
      template:
        metadata:
          labels:
            app: hello
        spec:
          containers:
            - name: hello-container
              image: busybox
              command: ['sh', '-c', 'echo Hello from my container! && sleep 3600']

Save the contents to deployment-rolling.yaml and create the deployment:

$ kubectl create -f deployment-rolling.yaml

deployment.apps/hello created

Finally, run kubectl get po --watch from the second terminal window to start watching the Pods. Then update the image from the first terminal window:

kubectl set image deployment hello hello-container=busybox:1.31.1

$ kubectl get po --watch

NAME READY STATUS RESTARTS AGE

hello-6fcbc8bc84-4xnt7 1/1 Running 0 37s

hello-6fcbc8bc84-bpsxj 1/1 Running 0 37s

hello-6fcbc8bc84-dx4cg 1/1 Running 0 37s

hello-6fcbc8bc84-fx7ll 1/1 Running 0 37s

hello-6fcbc8bc84-fxsp5 1/1 Running 0 37s

hello-6fcbc8bc84-jhb29 1/1 Running 0 37s

hello-6fcbc8bc84-k8dh9 1/1 Running 0 37s

hello-6fcbc8bc84-qlt2q 1/1 Running 0 37s

hello-6fcbc8bc84-wx4v7 1/1 Running 0 37s

hello-6fcbc8bc84-xkr4x 1/1 Running 0 37s

hello-84f59c54cd-ztfg4 0/1 Pending 0 0s

hello-84f59c54cd-ztfg4 0/1 Pending 0 0s

hello-84f59c54cd-mtwcc 0/1 Pending 0 0s

hello-84f59c54cd-x7rww 0/1 Pending 0 0s

hello-6fcbc8bc84-dx4cg 1/1 Terminating 0 46s

hello-6fcbc8bc84-fx7ll 1/1 Terminating 0 46s

hello-6fcbc8bc84-bpsxj 1/1 Terminating 0 46s

hello-6fcbc8bc84-jhb29 1/1 Terminating 0 46s

...

kubectl delete deploy hello
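To make the maxUnavailable and maxSurge arithmetic concrete, here is a small Python sketch that computes the lower bound on available Pods and the upper bound on total Pods during a rollout. The rollout_bounds function is made up for illustration. It assumes that percentages are resolved against the desired replica count, with maxUnavailable rounded down and maxSurge rounded up.

```python
import math


def rollout_bounds(replicas, max_unavailable="25%", max_surge="25%"):
    """Return (min_available, max_total) Pods during a rolling update.

    Values can be absolute numbers or percentage strings such as "40%".
    """
    def resolve(value, round_up):
        if isinstance(value, str) and value.endswith("%"):
            exact = replicas * int(value[:-1]) / 100
            return math.ceil(exact) if round_up else math.floor(exact)
        return int(value)

    unavailable = resolve(max_unavailable, round_up=False)
    surge = resolve(max_surge, round_up=True)
    return replicas - unavailable, replicas + surge


# The deployment from this section: 10 replicas, 40% for both settings.
print(rollout_bounds(10, "40%", "40%"))  # (6, 14)

# Five replicas with the default 25% settings.
print(rollout_bounds(5))  # (4, 7)
```

With 10 replicas and 40% for both settings, at least 6 Pods stay available and at most 14 Pods exist at any time, which matches the 60% and 140% figures above.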

Services

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-frontend
      labels:
        app: web-frontend
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: web-frontend
      template:
        metadata:
          labels:
            app: web-frontend
        spec:
          containers:
            - name: web-frontend-container
              image: learncloudnative/helloworld:0.1.0
              ports:
                - containerPort: 3000

One new thing in this deployment is the ports section. Using the containerPort field, we can set the port number that our website server is listening on. The learncloudnative/helloworld:0.1.0 image is a simple Node.js Express application.

Save the above YAML in web-frontend.yaml and create the deployment:

$ kubectl create -f web-frontend.yaml

deployment.apps/web-frontend created

Run kubectl get pods to ensure the Pod is up and running, and then get the logs from the container:

$ kubectl get po

NAME READY STATUS RESTARTS AGE

web-frontend-68f784d855-rdt97 1/1 Running 0 65s

$ kubectl logs web-frontend-68f784d855-rdt97

> helloworld@1.0.0 start /app

> node server.js

Listening on port 3000

From the logs, you will notice that the container is listening on port 3000. If we use the output flag to get the wide output (-o wide), you'll notice the Pod's IP address, 10.244.0.170:

$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-frontend-68f784d855-rdt97 1/1 Running 0 65s 10.244.0.170 docker-desktop <none> <none>

$ kubectl delete po web-frontend-68f784d855-rdt97

pod "web-frontend-68f784d855-rdt97" deleted

$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-frontend-68f784d855-8c76m 1/1 Running 0 7s 10.244.0.171 docker-desktop <none> <none>

$ kubectl scale deploy web-frontend --replicas=4

deployment.apps/web-frontend scaled

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-frontend-68f784d855-8c76m 1/1 Running 0 5m23s 10.244.0.171 docker-desktop <none> <none>

web-frontend-68f784d855-jrqq4 1/1 Running 0 18s 10.244.0.172 docker-desktop <none> <none>

web-frontend-68f784d855-mftl6 1/1 Running 0 18s 10.244.0.173 docker-desktop <none> <none>

web-frontend-68f784d855-stfqj 1/1 Running 0 18s 10.244.0.174 docker-desktop <none> <none>

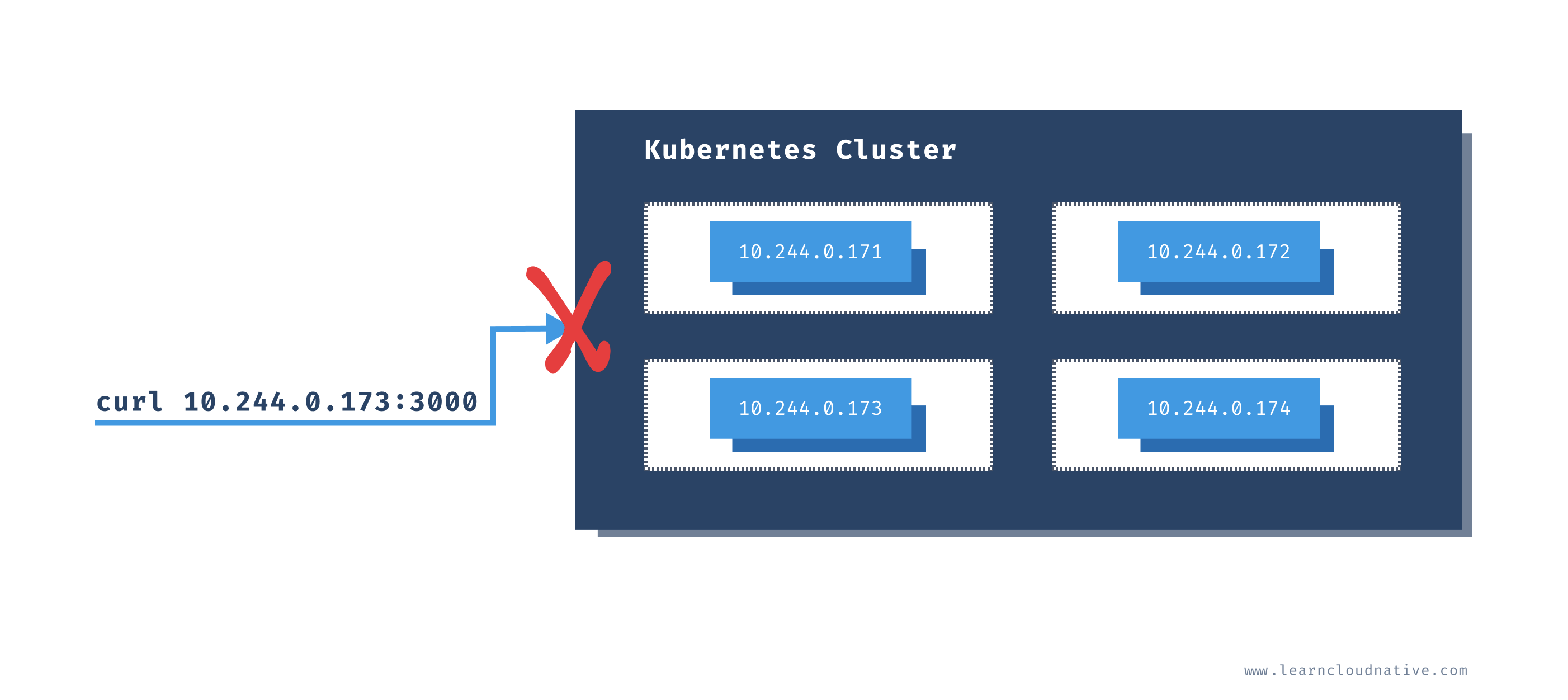

How to access the Pods without a service?

$ curl -v 10.244.0.173:3000

* Trying 10.244.0.173...

* TCP_NODELAY set

* Connection failed

* connect to 10.244.0.173 port 3000 failed: Network is unreachable

* Failed to connect to 10.244.0.173 port 3000: Network is unreachable

* Closing connection 0

curl: (7) Failed to connect to 10.244.0.173 port 3000: Network is unreachable

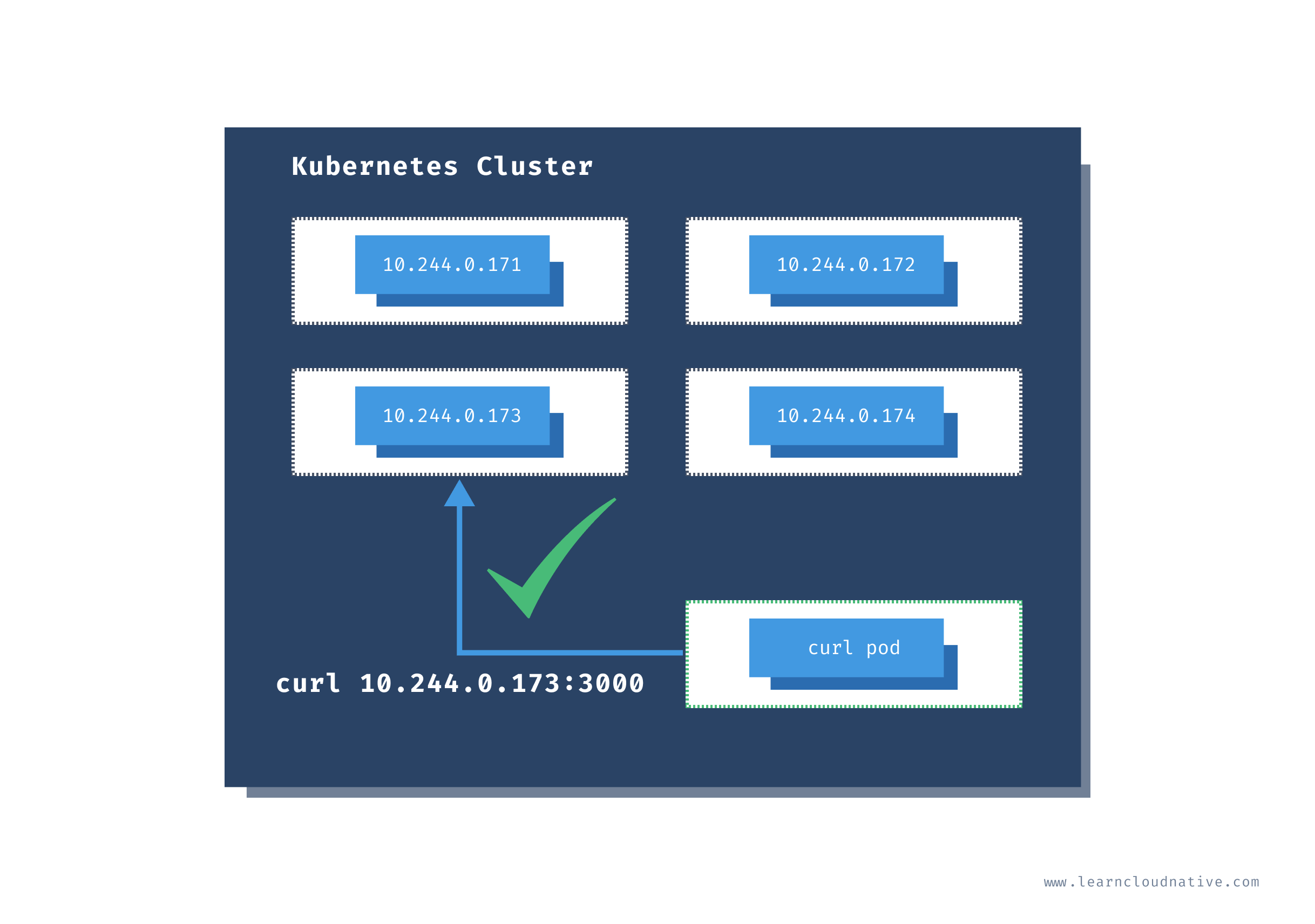

The radial/busyboxplus:curl image is the one I frequently run inside the cluster in case I need to check something or debug things. Using the -i and --tty flags, we declare that we want to allocate a terminal (tty) and that we want an interactive session, so we can run commands directly inside the container.

I usually name this Pod curl, but you can name it whatever you like:

$ kubectl run curl --image=radial/busyboxplus:curl -i --tty

If you don't see a command prompt, try pressing enter.

[ root@curl:/ ]$

[ root@curl:/ ]$ curl -v 10.244.0.173:3000

> GET / HTTP/1.1

> User-Agent: curl/7.35.0

> Host: 10.244.0.173:3000

> Accept: */*

>

< HTTP/1.1 200 OK

< X-Powered-By: Express

< Content-Type: text/html; charset=utf-8

< Content-Length: 111

< ETag: W/"6f-U4ut6Q03D1uC/sbzBDyZfMqFSh0"

< Date: Wed, 20 May 2020 22:10:49 GMT

< Connection: keep-alive

<

<link rel="stylesheet" type="text/css" href="css/style.css" />

<div class="container">

Hello World!

</div>[ root@curl:/ ]$

Type exit to return to your terminal. The curl Pod will continue to run, and to access it again, you can use the attach command:

kubectl attach curl -c curl -i -t

Note

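Note that the attach command connects you to a process that is already running inside a container. To start a new command, such as a shell, in any container running inside the cluster, you can use kubectl exec instead.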

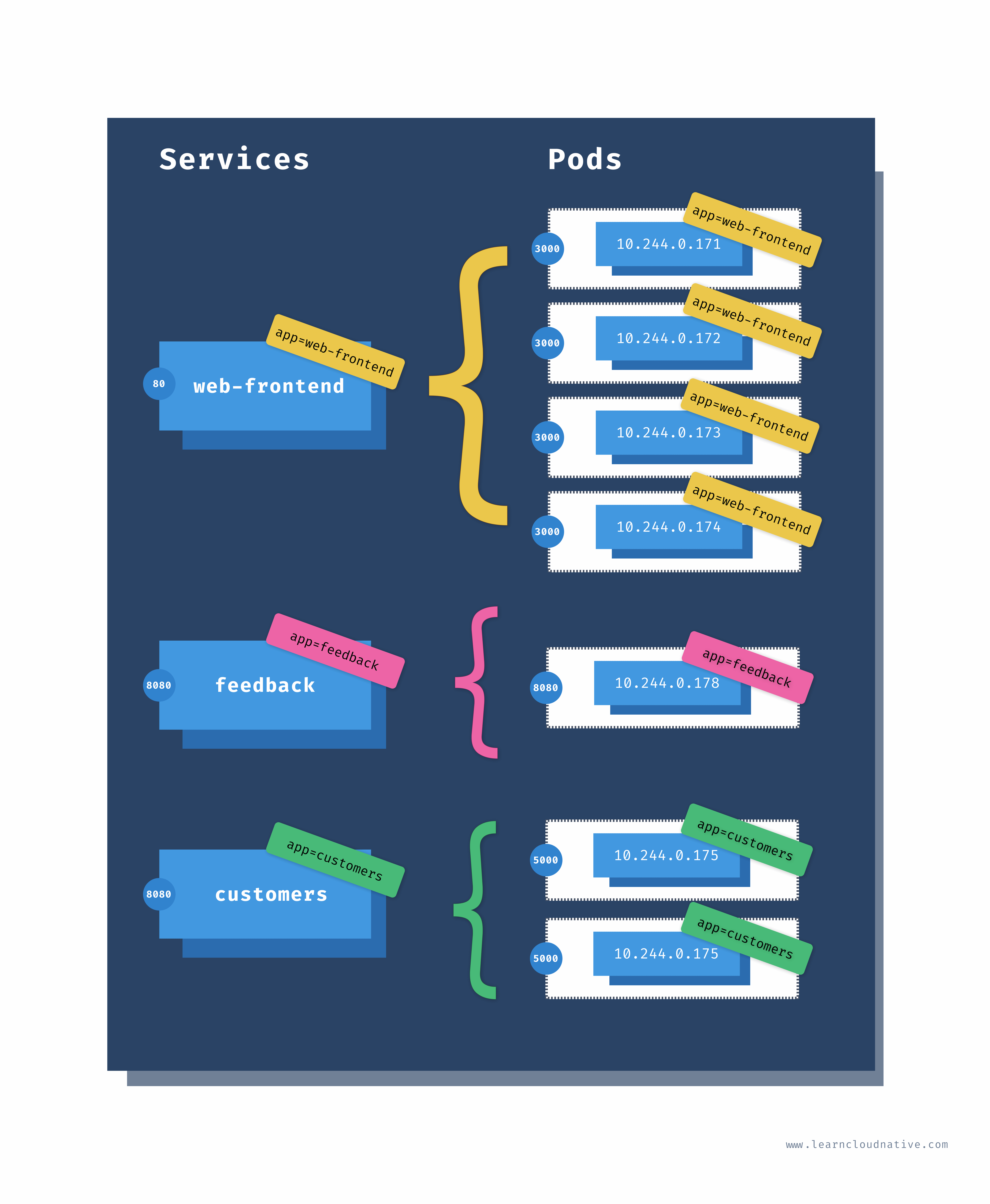

Accessing Pods using a service

    kind: Service
    apiVersion: v1
    metadata:
      name: web-frontend
      labels:
        app: web-frontend
    spec:
      selector:
        app: web-frontend
      ports:
        - port: 80
          name: http
          targetPort: 3000

The top part of the YAML sets the kind field; it's the same structure we saw with Pods, ReplicaSets, and Deployments.

The selector section is where we define the labels that the Service uses to query the Pods. If you go back to the Deployment YAML, you will notice that the Pods have this exact label set as well.

Under the ports field, we define the port on which the service will be accessible (80), and with targetPort we tell the service on which port it can reach the Pods. The targetPort value matches the containerPort value in the Deployment YAML.

Save the YAML from above to the web-frontend-service.yaml file and deploy it:

$ kubectl create -f web-frontend-service.yaml

service/web-frontend created

To see the created service, you can run the get service command:

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7d

web-frontend ClusterIP 10.100.221.29 <none> 80/TCP 24s

The web-frontend service has an IP address that will not change (assuming you don't delete the service) and can be used to reliably access the underlying Pods.

Let's attach to the curl container we started before and try to access the Pods through the service:

$ kubectl attach curl -c curl -i -t

If you don't see a command prompt, try pressing enter.

[ root@curl:/ ]$

Since we set the service port to 80, we can curl the service IP directly, and we will get back the same response as before:

[ root@curl:/ ]$ curl 10.100.221.29

<link rel="stylesheet" type="text/css" href="css/style.css" />

<div class="container">

Hello World!

</div>

Each Service also gets a DNS name in the format service-name.namespace.svc.cluster-domain.sample. Since our service is in the default namespace and the cluster domain is cluster.local, we can access the service using web-frontend.default.svc.cluster.local:

[ root@curl:/ ]$ curl web-frontend.default.svc.cluster.local

<link rel="stylesheet" type="text/css" href="css/style.css" />

<div class="container">

Hello World!

</div>

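From a Pod in the same namespace, you can also use just the service name, or the service name together with the namespace:

[ root@curl:/ ]$ curl web-frontend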

<link rel="stylesheet" type="text/css" href="css/style.css" />

<div class="container">

Hello World!

[ root@curl:/ ]$ curl web-frontend.default

<link rel="stylesheet" type="text/css" href="css/style.css" />

<div class="container">

Hello World!

</div>

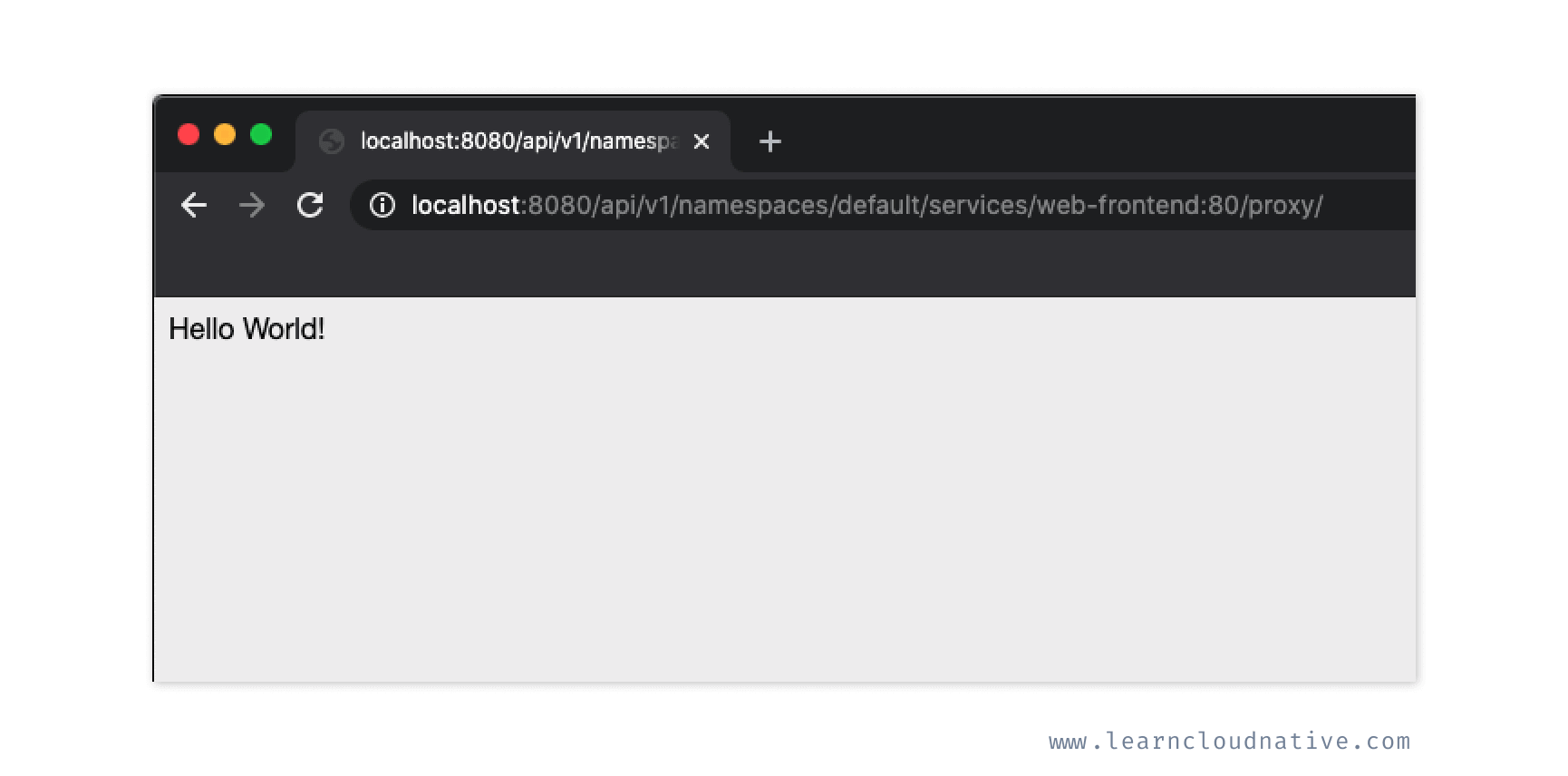
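The DNS names used in the curl commands above follow a fixed pattern, sketched below in Python. The service_dns_names helper is made up for illustration, and cluster.local is the cluster domain assumed throughout this article; your cluster may use a different one.

```python
def service_dns_names(service, namespace="default", cluster_domain="cluster.local"):
    """Return the names a Service can be reached by, shortest first."""
    return [
        service,                                        # from the same namespace
        f"{service}.{namespace}",                       # service plus namespace
        f"{service}.{namespace}.svc.{cluster_domain}",  # fully qualified name
    ]


for name in service_dns_names("web-frontend"):
    print(f"curl {name}")
```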

Using the Kubernetes proxy

The kubectl proxy command creates a gateway between your local computer (localhost) and the Kubernetes API server.

The following command proxies requests from localhost:8080 to the Kubernetes API inside the cluster:

kubectl proxy --port=8080

If you open http://localhost:8080/ in your browser, you will see the list of all APIs from the Kubernetes API proxy:

    {
      "paths": [
        "/api",
        "/api/v1",
        "/apis",
        "/apis/",
        "/apis/admissionregistration.k8s.io",
        "/apis/admissionregistration.k8s.io/v1",
        "/apis/admissionregistration.k8s.io/v1beta1",
        ...

For example, you can list all Pods running in the cluster by opening http://localhost:8080/api/v1/pods, or navigate to http://localhost:8080/api/v1/namespaces to see all namespaces.

Using this proxy, we can also access the web-frontend service we deployed. So, instead of running a Pod inside the cluster and making cURL requests to the service or Pods, you can create the proxy server and use the following URL to access the service:

http://localhost:8080/api/v1/namespaces/default/services/web-frontend:80/proxy/

Note

The URL format to access a service is http://localhost:[PORT]/api/v1/namespaces/[NAMESPACE]/services/[SERVICE_NAME]:[SERVICE_PORT]/proxy. In addition to using the service port (e.g. 80), you can also name your ports and use the port name instead (e.g. http).

Opening the URL in the browser shows the Hello World message.
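The proxy URL format can be captured in a small helper. This Python sketch only assembles the URL string described in the note; the service_proxy_url function and its parameter names are made up for illustration.

```python
from urllib.parse import quote


def service_proxy_url(service, port, namespace="default", proxy_port=8080, path=""):
    """Build the kubectl proxy URL for a Service.

    `port` can be a service port number (80) or a port name ("http").
    """
    return (
        f"http://localhost:{proxy_port}/api/v1/namespaces/{quote(namespace)}"
        f"/services/{quote(service)}:{port}/proxy/{path.lstrip('/')}"
    )


print(service_proxy_url("web-frontend", 80))
print(service_proxy_url("web-frontend", "http"))
```

The first call prints the same URL that was used above to reach the web-frontend service through the proxy.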

Viewing service details

Using the describe command, you can describe an object in Kubernetes and look at its properties. For example, let's take a look at the details of the web-frontend service we deployed:

$ kubectl describe svc web-frontend

Name: web-frontend

Namespace: default

Labels: app=web-frontend

Annotations: <none>

Selector: app=web-frontend

Type: ClusterIP

IP: 10.100.221.29

Port: http 80/TCP

TargetPort: 3000/TCP

Endpoints: 10.244.0.171:3000,10.244.0.172:3000,10.244.0.173:3000 + 1 more...

Session Affinity: None

Events: <none>

This view gives us more information than the get command does: it shows the labels, the selector, the service type, the IP, and the ports. Additionally, you will notice the Endpoints. These IP addresses correspond to the IP addresses of the Pods.

You can also view the endpoints using the get endpoints command:

$ kubectl get endpoints

NAME ENDPOINTS AGE

kubernetes 172.18.0.2:6443 8d

web-frontend 10.244.0.171:3000,10.244.0.172:3000,10.244.0.173:3000 + 1 more... 41h

You can use the --watch flag to watch the endpoints change as the Pods are scaled, like this:

$ kubectl get endpoints --watch

$ kubectl scale deploy web-frontend --replicas=1

deployment.apps/web-frontend scaled

$ kubectl get endpoints -w

NAME ENDPOINTS AGE

kubernetes 172.18.0.2:6443 8d

web-frontend 10.244.0.171:3000,10.244.0.172:3000,10.244.0.173:3000 + 1 more... 41h

web-frontend 10.244.0.171:3000,10.244.0.172:3000 41h

web-frontend 10.244.0.171:3000 41h
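The relationship between the Service selector, the Pods, and the endpoints list can be sketched in a few lines of Python. This is a simplified model, not the actual endpoints controller: Pods are plain dictionaries, the endpoints_for helper is made up, and the third Pod (its IP and label) is a hypothetical unrelated workload.

```python
def endpoints_for(selector, target_port, pods):
    """List "ip:port" endpoints for ready Pods whose labels match the selector."""
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod.get("ready", True)
        and all(pod["labels"].get(k) == v for k, v in selector.items())
    ]


pods = [
    {"ip": "10.244.0.171", "labels": {"app": "web-frontend"}},
    {"ip": "10.244.0.172", "labels": {"app": "web-frontend"}},
    {"ip": "10.244.0.180", "labels": {"run": "curl"}},  # hypothetical, not selected
]

print(endpoints_for({"app": "web-frontend"}, 3000, pods))

pods.pop(1)  # scaling down removes a Pod, and its endpoint disappears
print(endpoints_for({"app": "web-frontend"}, 3000, pods))
```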

Kubernetes service types

Type: ClusterIP

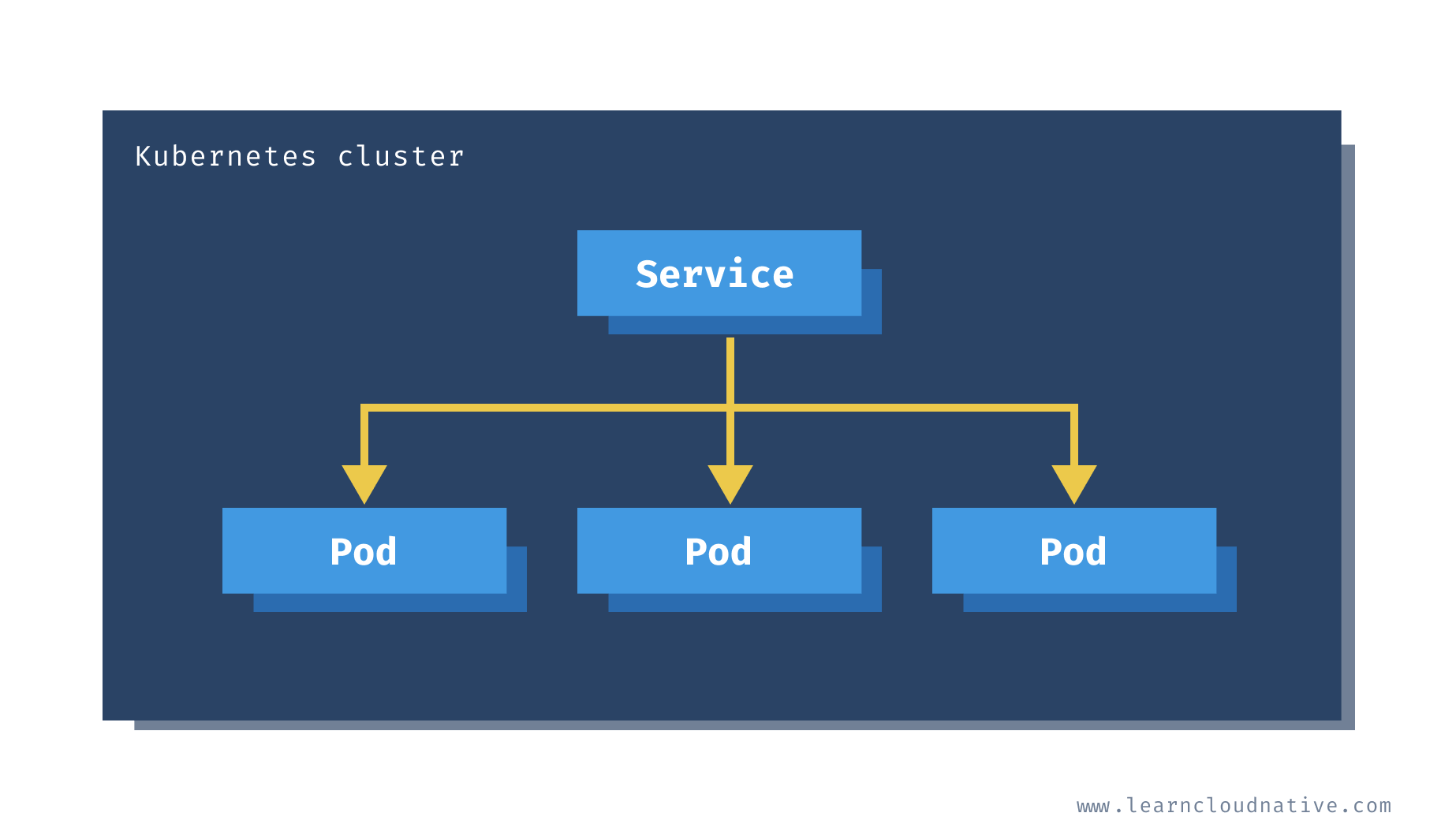

ClusterIP

ports section in the YAML file.

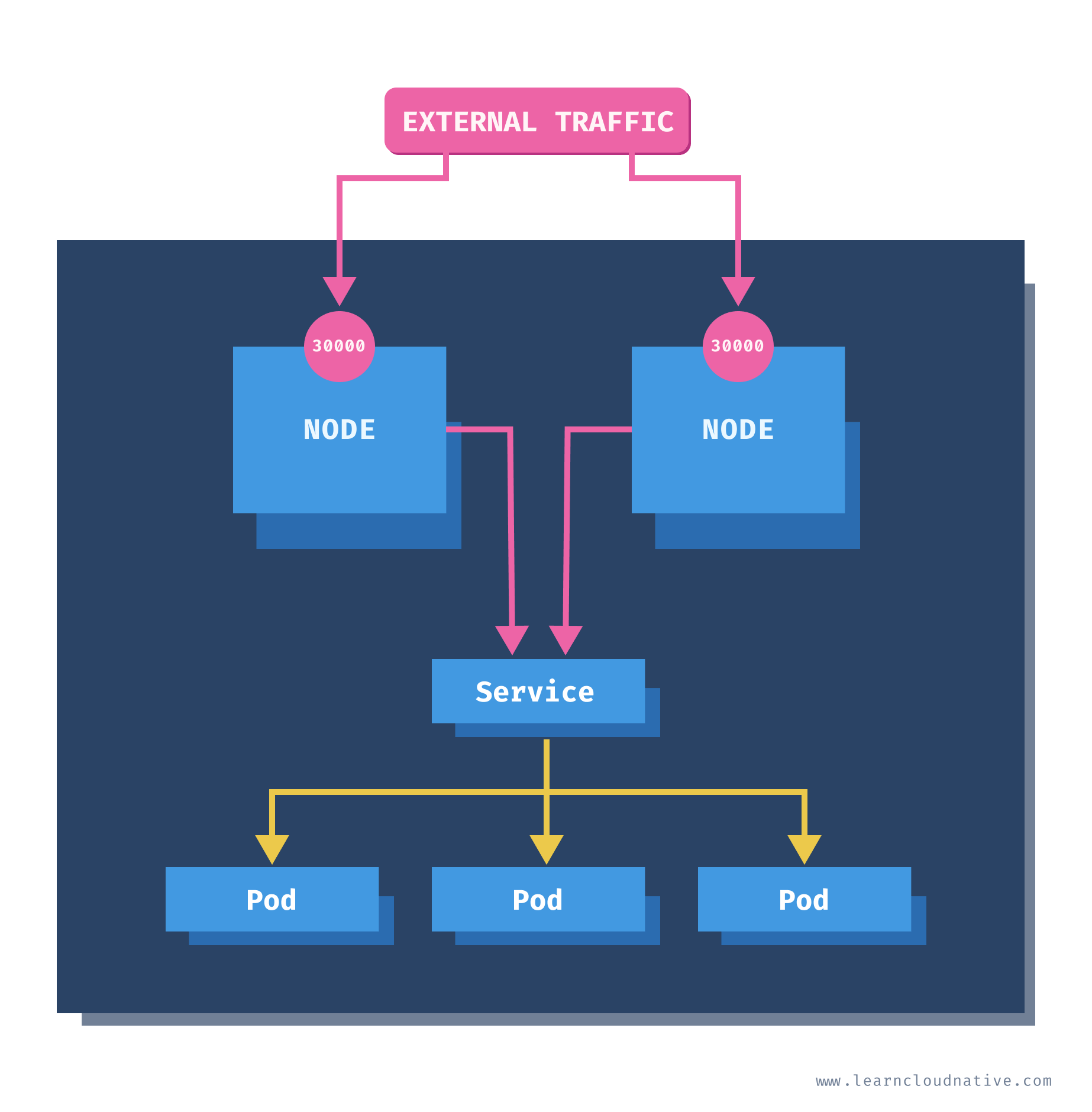

NodePort

Using a node's IP address and the node port, 30000 as shown in the figure above, you will be able to access the service and the Pods.

    kind: Service
    apiVersion: v1
    metadata:
      name: web-frontend
      labels:
        app: web-frontend
    spec:
      type: NodePort
      selector:
        app: web-frontend
      ports:
        - port: 80
          name: http
          targetPort: 3000

There's an additional field called nodePort that can be set under the ports section. However, it is a best practice to leave it out and let Kubernetes pick a port that will be available on all nodes in the cluster. By default, the node port will be allocated between 30000 and 32767 (this is configurable in the API server).

Let's delete the previous web-frontend service and create one that uses NodePort. To delete the previous service, run kubectl delete svc web-frontend. Then, copy the YAML contents above to the web-frontend-nodeport.yaml file and run kubectl create -f web-frontend-nodeport.yaml, or (if you're on Mac/Linux) run the following command to create the resource directly from the command line:

    cat <<EOF | kubectl create -f -
    kind: Service
    apiVersion: v1
    metadata:
      name: web-frontend
      labels:
        app: web-frontend
    spec:
      type: NodePort
      selector:
        app: web-frontend
      ports:
        - port: 80
          name: http
          targetPort: 3000
    EOF

Once the service is created, run kubectl get svc. You will notice the service type has changed and the port number is random as well:

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8d

web-frontend NodePort 10.99.15.20 <none> 80:31361/TCP 48m

$ kubectl describe node | grep InternalIP

InternalIP: 172.18.0.2

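We can now attach to the curl Pod and use the node's internal IP address together with the node port (31361):

$ kubectl attach curl -c curl -i -t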

If you don't see a command prompt, try pressing enter.

[ root@curl:/ ]$ curl 172.18.0.2:31361

<link rel="stylesheet" type="text/css" href="css/style.css" />

<div class="container">

Hello World!

</div>

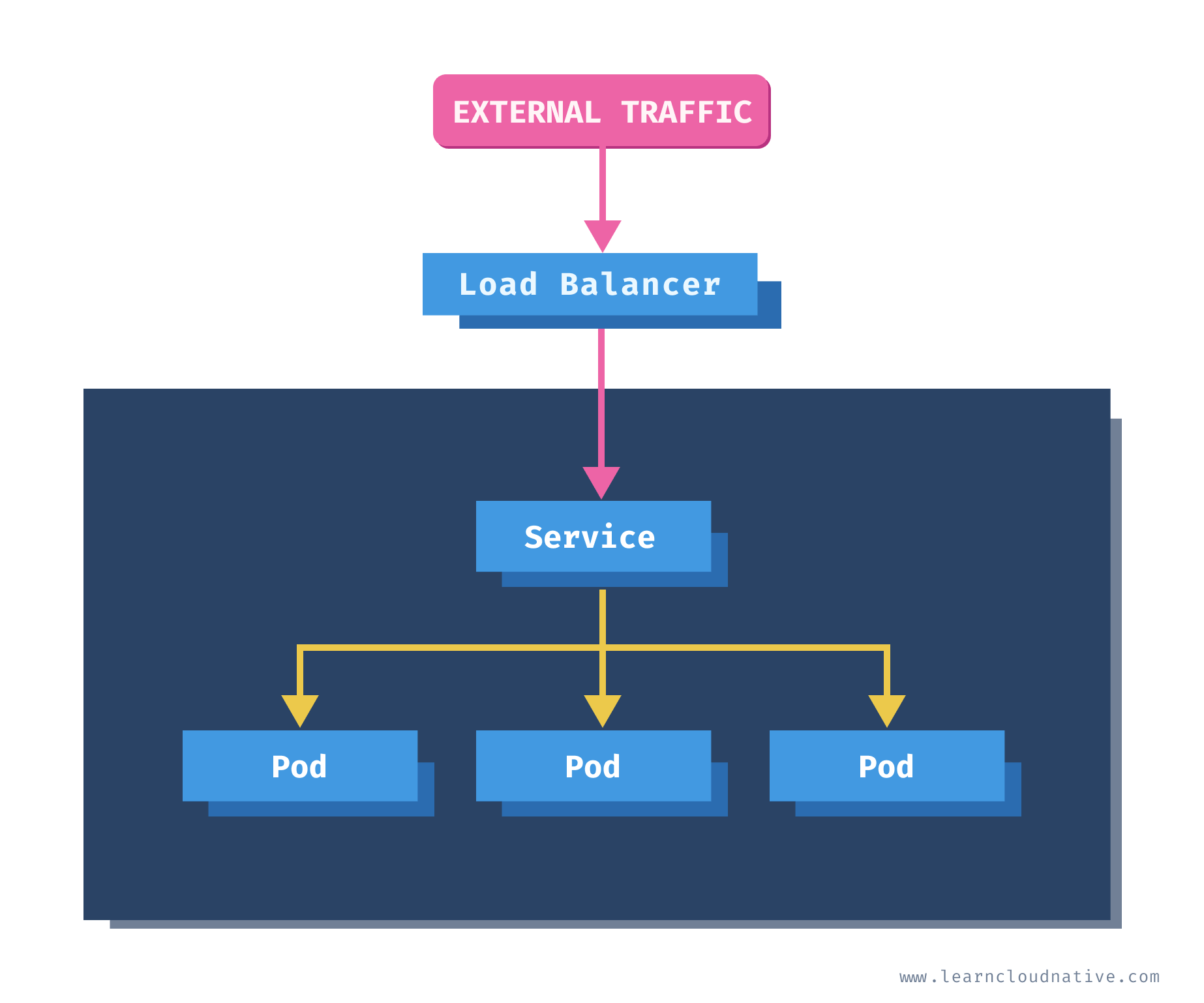

Since we are using Docker Desktop, we can use localhost as the node address and the node port to access the service. If you open http://localhost:31361 in your browser, you will be able to access the web-frontend service.

LoadBalancer

Let's delete the previous service with kubectl delete svc web-frontend and create a service that uses the LoadBalancer type:

    cat <<EOF | kubectl create -f -
    kind: Service
    apiVersion: v1
    metadata:
      name: web-frontend
      labels:
        app: web-frontend
    spec:
      type: LoadBalancer
      selector:
        app: web-frontend
      ports:
        - port: 80
          name: http
          targetPort: 3000
    EOF

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 59d

web-frontend LoadBalancer 10.102.131.150 localhost 80:30832/TCP 2s

Note

When using a cloud-managed Kubernetes cluster, the external IP would be a public IP address you could use to access the service.

If you open http://localhost or http://127.0.0.1 in your browser, you will be taken to the website running inside the cluster.

ExternalName

When a workload looks up an ExternalName service, the cluster DNS returns the name set in the externalName field, instead of the cluster IP of the service.

    kind: Service
    apiVersion: v1
    metadata:
      name: my-database
    spec:
      type: ExternalName
      externalName: mydatabase.example.com

You can then use the my-database service name to access the external database from the workloads running inside your cluster.