ArgoCD Best Practices You Should Know

This article contains a set of 10 best practices for Argo Workflows, ArgoCD, and Argo Rollouts.

Argo Best Practices

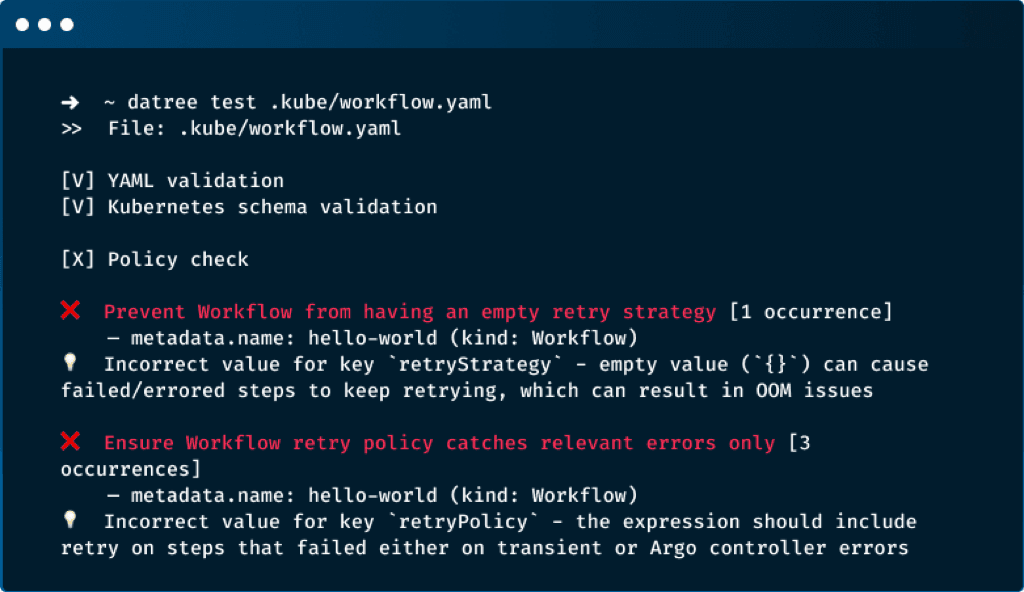

1. Disallow providing an empty retryStrategy (i.e. {})
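As a minimal sketch of this rule, the following Workflow gives its template a retryStrategy with an explicit limit instead of an empty one. The workflow name, image, and always-failing command are illustrative assumptions.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: retry-limited-   # hypothetical name
spec:
  entrypoint: main
  templates:
    - name: main
      # An explicit limit caps the number of retries.
      # An empty retryStrategy ({}) would retry until completion instead.
      retryStrategy:
        limit: "3"
      container:
        image: alpine:3.18
        command: [sh, -c, "exit 1"]   # always fails, to exercise the retries
```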

Argo Workflows provides a retryStrategy that dictates how failed or errored steps are retried in a workflow. Providing an empty retryStrategy (i.e. retryStrategy: {}) will cause a container to retry until completion, which can eventually cause OOM issues.

2. Ensure that Workflow pods are not configured to use the default service account
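A minimal sketch of a Workflow that runs under a dedicated service account rather than the namespace default. The account name my-workflow-sa is hypothetical; in practice it would be bound (for example, with a Role and RoleBinding) to only the permissions the workflow and the Argo executor need, and those vary between Argo versions.

```yaml
# Hypothetical dedicated service account; bind it to a narrowly scoped Role.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: my-workflow-sa
---
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dedicated-sa-
spec:
  entrypoint: main
  # Run every pod of this workflow under the dedicated account
  # instead of the namespace's default service account.
  serviceAccountName: my-workflow-sa
  templates:
    - name: main
      container:
        image: alpine:3.18
        command: [echo, "hello"]
```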

All pods in a workflow run with the service account specified in workflow.spec.serviceAccountName. If it is omitted, Argo uses the default service account of the workflow's namespace. This gives the workflow (i.e. the pod) the ability to interact with the Kubernetes API server, so an attacker with access to a single container could abuse Kubernetes through the automatically mounted service account token (AutomountServiceAccountToken). Conversely, if AutomountServiceAccountToken has been disabled, the default service account that Argo uses won't have any permissions, and the workflow will fail.

3. Ensure label part-of: argocd exists for ConfigMaps
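For illustration, an argocd-cm ConfigMap carrying the required label might look like the sketch below. It assumes Argo CD is installed in the argocd namespace; the timeout.reconciliation entry is just an example setting.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: argocd-cm          # one of the fixed names Argo CD looks for
  namespace: argocd        # assumed install namespace
  labels:
    app.kubernetes.io/name: argocd-cm
    # Without this label, Argo CD will not use the ConfigMap.
    app.kubernetes.io/part-of: argocd
data:
  timeout.reconciliation: 180s
```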

Argo CD reads its configuration from ConfigMap and Secret resources, and any of them without the label app.kubernetes.io/part-of: argocd won't be used by Argo CD. For each specific kind of ConfigMap and Secret resource, there is only a single supported resource name (as listed in the Argo CD documentation); if you need to merge settings, you need to do it before creating the resources. It's therefore essential to label your ConfigMap resources with app.kubernetes.io/part-of: argocd; otherwise, Argo CD will not be able to use them.

4. Disable fail-fast in DAGs by setting failFast=false
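The sketch below is a hypothetical DAG in which task A always fails while B and C succeed. With failFast: false, C is still scheduled once B completes, regardless of A's failure. The task names and images are illustrative.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dag-no-failfast-
spec:
  entrypoint: main
  templates:
    - name: main
      dag:
        # Keep scheduling the remaining branches after a task fails.
        failFast: false
        tasks:
          - name: A
            template: fail
          - name: B
            template: succeed
          - name: C
            dependencies: [B]
            template: succeed
    - name: fail
      container:
        image: alpine:3.18
        command: [sh, -c, "exit 1"]
    - name: succeed
      container:
        image: alpine:3.18
        command: [sh, -c, "exit 0"]
```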

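By default, failFast is true: as soon as a DAG detects a failed node, it stops scheduling new steps and then waits for all running nodes to complete before failing the DAG itself. If failFast is set to false, the DAG runs all of its branches to completion (either success or failure), regardless of the failed outcomes of other branches in the DAG.

5. Ensure the Rollout pause step has a configured duration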
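A minimal sketch of a canary Rollout in which every pause step has an explicit duration. The Rollout name, labels, image, weights, and durations are assumptions chosen for illustration.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: example-rollout   # hypothetical
spec:
  replicas: 5
  selector:
    matchLabels:
      app: example
  template:
    metadata:
      labels:
        app: example
    spec:
      containers:
        - name: app
          image: nginx:1.25
  strategy:
    canary:
      steps:
        - setWeight: 20
        - pause: {duration: 10m}   # resumes automatically after 10 minutes
        - setWeight: 60
        - pause: {duration: 60}    # a bare number is read as seconds
```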

A canary strategy in Argo Rollouts is defined as a list of steps, most commonly setWeight and pause. The setWeight field dictates the percentage of traffic that should be sent to the canary, and the pause step instructs the rollout to pause. When the controller reaches a pause step for a rollout, it adds a PauseCondition struct to the .status.PauseConditions field. If the duration field within the pause struct is set, the rollout will not progress to the next step until it has waited for the value of the duration field. However, if the duration field is omitted, the rollout might wait indefinitely until the pause condition is removed.

6. Specify Rollout's revisionHistoryLimit
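A minimal sketch of a Rollout that sets revisionHistoryLimit explicitly. The value 3 is an arbitrary example; pick it based on how far back you need to be able to roll back. The remaining fields are illustrative.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: example-rollout   # hypothetical
spec:
  replicas: 3
  # Keep only the three most recent old ReplicaSets for rollbacks
  # (Argo Rollouts keeps 10 when the field is omitted).
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: example
  template:
    metadata:
      labels:
        app: example
    spec:
      containers:
        - name: app
          image: nginx:1.25
  strategy:
    canary:
      steps:
        - setWeight: 50
        - pause: {duration: 5m}
```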

.spec.revisionHistoryLimit is an optional field that indicates the number of old ReplicaSets that should be retained to allow rollback. These old ReplicaSets consume resources in etcd and crowd the output of kubectl get rs. The configuration of each revision is stored in its ReplicaSet; therefore, once an old ReplicaSet is deleted, you lose the ability to roll back to that revision of the Rollout.

7. Set scaleDownDelaySeconds to 30s to ensure IP table propagation across the nodes in a cluster
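A minimal sketch of a blue-green Rollout that sets scaleDownDelaySeconds explicitly. The Service names example-active and example-preview are hypothetical and would need to exist as Services selecting the Rollout's pods.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: example-bluegreen   # hypothetical
spec:
  replicas: 3
  selector:
    matchLabels:
      app: example
  template:
    metadata:
      labels:
        app: example
    spec:
      containers:
        - name: app
          image: nginx:1.25
  strategy:
    blueGreen:
      activeService: example-active     # hypothetical Service
      previewService: example-preview   # hypothetical Service
      # Wait 30s after switching the active Service before scaling
      # down the old ReplicaSet, so IP table changes can propagate.
      scaleDownDelaySeconds: 30
```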

After traffic is switched to a new ReplicaSet, Argo Rollouts uses the scaleDownDelaySeconds field to give nodes enough time to broadcast the IP table changes. If omitted, the Rollout waits 30 seconds before scaling down the previous ReplicaSet. It is recommended to set scaleDownDelaySeconds to a minimum of 30 seconds to ensure IP table propagation across the nodes in a cluster. The reason is that Kubernetes waits for a specified time, called the termination grace period, before forcibly terminating a pod; by default, this is 30 seconds.

8. Ensure retry on both Error and TransientError

retryStrategy is an optional field of the Workflow CRD that provides controls for retrying a workflow step. One of the fields of retryStrategy is retryPolicy, which defines the policy of NodePhase statuses that will be retried (NodePhase is the condition of a node at the current time).

The options for retryPolicy are Always, OnFailure (the default), OnError, and OnTransientError. In addition, the user can use an expression to control retries in more detail.

- retryPolicy=Always is too much: the user only wants to retry on system-level errors (e.g. the node dying or being preempted), not on errors occurring in user-level code, since these failures indicate a bug. In addition, this option is more suitable for long-running containers than for workflows, which are jobs.

- retryPolicy=OnError doesn't handle preemptions: retryPolicy=OnError handles some system-level errors, such as the node disappearing or the pod being deleted. However, during graceful Pod termination, the kubelet assigns a Failed status and a Shutdown reason to the terminated Pods. As a result, node preemptions result in the node status "Failed", not "Error", so preemptions aren't retried.

- retryPolicy=OnError doesn't handle transient errors: classifying a preemption failure message as a transient error is possible; however, this requires retryPolicy=OnTransientError (see also TRANSIENT_ERROR_PATTERN).

Therefore, we recommend setting retryPolicy: "Always" and using the following expression: lastRetry.status == "Error" or (lastRetry.status == "Failed" and asInt(lastRetry.exitCode) not in [0])
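Put together, a template following this recommendation might look like the sketch below. The limit of 3 retries, the workflow name, and the container are assumptions; the expression is the one given above, wrapped in single quotes so that YAML keeps the inner double quotes.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: retry-expression-   # hypothetical name
spec:
  entrypoint: main
  templates:
    - name: main
      retryStrategy:
        limit: "3"
        retryPolicy: "Always"
        # Narrow "Always" down: retry on Error, or on Failed with a non-zero exit code.
        expression: 'lastRetry.status == "Error" or (lastRetry.status == "Failed" and asInt(lastRetry.exitCode) not in [0])'
      container:
        image: alpine:3.18
        command: [sh, -c, "exit 1"]
```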

9. Ensure progressDeadlineAbort is set to true, especially if progressDeadlineSeconds has been set
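A minimal sketch of a Rollout that sets both fields. The 600-second deadline and the other fields are illustrative assumptions.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: example-rollout   # hypothetical
spec:
  replicas: 3
  # Mark the update as failed if it makes no progress for 10 minutes...
  progressDeadlineSeconds: 600
  # ...and abort it instead of only reporting ProgressDeadlineExceeded.
  progressDeadlineAbort: true
  selector:
    matchLabels:
      app: example
  template:
    metadata:
      labels:
        app: example
    spec:
      containers:
        - name: app
          image: nginx:1.25
  strategy:
    canary:
      steps:
        - setWeight: 50
        - pause: {duration: 5m}
```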

Argo Rollouts supports the field progressDeadlineSeconds, which states the maximum time in seconds in which a rollout must make progress during an update before it is considered to be failed. When the deadline is exceeded, the rollout reports the condition ProgressDeadlineExceeded (the replica set has timed out progressing), but by default it is not aborted. To abort the rollout, the user should set both progressDeadlineSeconds and progressDeadlineAbort, with progressDeadlineAbort: true.

10. Ensure custom resources match the namespace of the ArgoCD instance
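As a sketch, assuming a default installation in the argocd namespace, an AppProject and an Application would both set metadata.namespace: argocd. The project name, repository URL, path, and destination namespace are hypothetical.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: team-a              # hypothetical project
  namespace: argocd         # same namespace as the Argo CD instance
spec:
  sourceRepos:
    - https://github.com/example/team-a.git   # hypothetical repository
  destinations:
    - server: https://kubernetes.default.svc
      namespace: team-a
---
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: team-a-app          # hypothetical application
  namespace: argocd         # must match the Argo CD instance namespace
spec:
  project: team-a
  source:
    repoURL: https://github.com/example/team-a.git
    targetRevision: HEAD
    path: manifests
  destination:
    server: https://kubernetes.default.svc
    namespace: team-a
```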

All Application and AppProject manifests should use the same metadata.namespace as the Argo CD instance, and which namespace that is depends on how you installed Argo CD. The default installation creates ClusterRoles and ClusterRoleBindings that reference the argocd namespace by default. In this case, it's recommended not only to ensure that all Argo CD resources match the namespace of the Argo CD instance but also to use the argocd namespace; otherwise, you need to update the namespace reference in all Argo CD internal resources. Alternatively, a namespace-level installation does not require a ClusterRole and ClusterRoleBinding. In that case, Argo CD creates Roles and associated RoleBindings in the namespace where it was deployed. The created service account is granted only a limited level of access, so for Argo CD to function as desired, access to the namespaces it manages must be explicitly granted. In this case, it's recommended to make sure all the resources, including the Application and AppProject, use the correct namespace of the Argo CD instance.

So Now What?

How Datree Works

Scan your cluster with Datree

kubectl datree test -- -n argocd

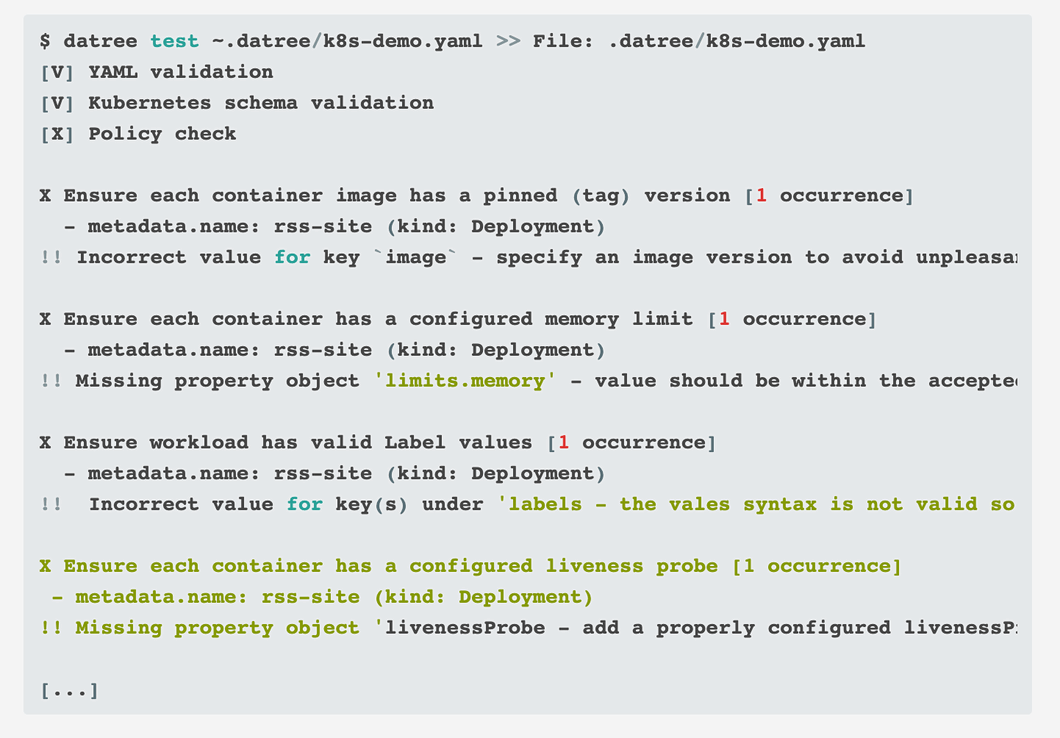

You can also use Datree as a kubectl plugin to validate your resources after deployment, get ready for future version upgrades, and monitor the overall compliance of your cluster.

Use Datree to shift left

Scan your manifests in the CI

To use datree, you first need to install the CLI on your machine and then execute it with the following command:

datree test <path>

The command runs the following checks on each file:

- YAML validation: verifies that the file is a valid YAML file.

- Kubernetes schema validation: verifies that the file is a valid Kubernetes/Argo resource.

- Policy check: verifies that the file is compliant with your Kubernetes policy (Datree built-in rules by default).