What is Container Network Interface (CNI)

What does the CNI spec look like

| Command | Description |
|---|---|
| ADD | Connects the container network namespace to the host network namespace, assigns the IP address to the container (doing the IP address management - IPAM), and sets up routes. |
| DEL | Removes the container network namespace from the host network namespace, removes the IP address from the container, and removes the routes. |
| CHECK | Checks if the container network namespace is connected to the host network namespace. |
| VERSION | Returns the version of the CNI plugin. |
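A container runtime issues these commands by executing the plugin binary, passing the command through environment variables and the network configuration as JSON on stdin. The sketch below only builds those inputs and does not run a plugin. The `build_cni_invocation` helper, the sample container ID, the netns path, and the default `cni_path` are made up for illustration; the `CNI_*` variable names come from the CNI specification.

```python
import json


def build_cni_invocation(command, container_id, netns, ifname, config,
                         cni_path="/opt/cni/bin"):
    """Return the (env, stdin) pair a runtime would hand to a CNI plugin.

    Hypothetical helper for illustration; it does not execute anything.
    """
    if command not in {"ADD", "DEL", "CHECK", "VERSION"}:
        raise ValueError(f"unknown CNI command: {command}")
    env = {
        "CNI_COMMAND": command,           # which operation to perform
        "CNI_CONTAINERID": container_id,  # container the operation targets
        "CNI_NETNS": netns,               # path to the network namespace
        "CNI_IFNAME": ifname,             # interface name inside the container
        "CNI_PATH": cni_path,             # where plugin binaries are searched
    }
    stdin = json.dumps(config).encode()   # network config is sent as JSON
    return env, stdin


env, stdin = build_cni_invocation(
    "ADD", "example-container-id", "/var/run/netns/example", "eth0",
    {"cniVersion": "0.3.1", "name": "kindnet", "type": "ptp"},
)
print(env["CNI_COMMAND"], json.loads(stdin)["type"])
```

A real runtime would then start the plugin process with this environment and write `stdin` to it; that step is left out on purpose.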

Here's how the kindnet plugin that's used by kind is configured:

    {
      "cniVersion": "0.3.1",
      "name": "kindnet",
      "plugins": [
        {
          "type": "ptp",
          "ipMasq": false,
          // IP Address Management (IPAM) plugin - i.e. assigns IP addresses
          "ipam": {
            "type": "host-local",
            "dataDir": "/run/cni-ipam-state",
            "routes": [{ "dst": "0.0.0.0/0" }],
            "ranges": [[{ "subnet": "10.244.2.0/24" }]]
          },
          "mtu": 1500
        },
        // Sets up traffic forwarding from one (or more) ports on the host to the container.
        // Intended to be run as part of the chain
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }

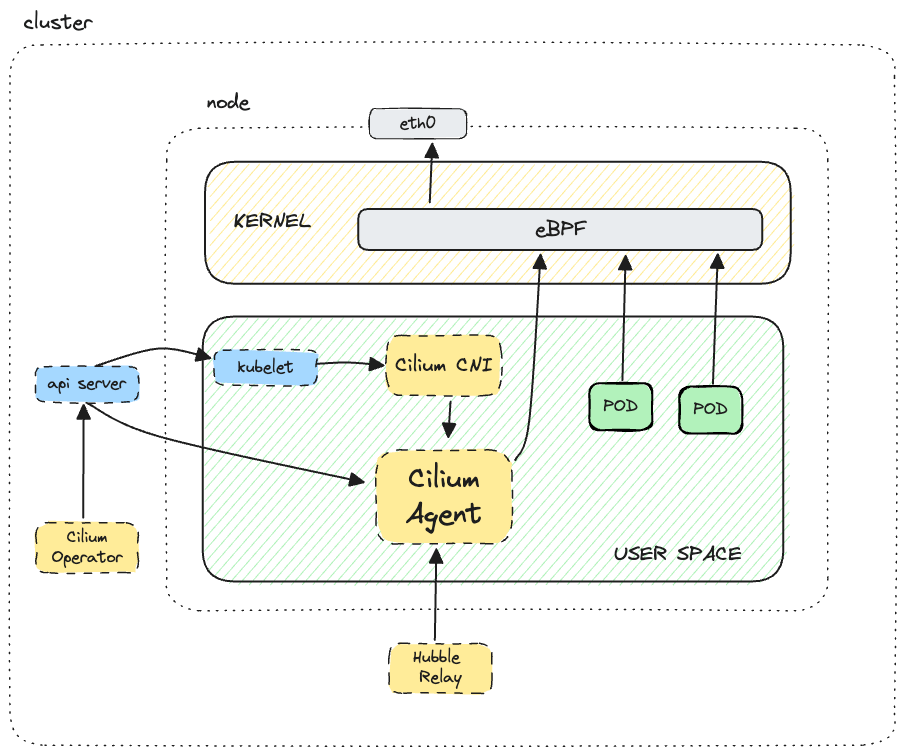
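The plugins list in the configuration above forms a chain. Per the CNI specification, ADD runs the plugins in list order and hands each plugin the previous plugin's result, while DEL runs them in reverse order. The sketch below imitates only that ordering with stub functions. `fake_ptp`, `fake_portmap`, the sample address, and the `portmap_target` field are placeholders, not the real plugins' behavior.

```python
def fake_ptp(conf, prev_result):
    # Stand-in for the ptp plugin: pretend an interface and an IP were set up.
    return {"interfaces": [{"name": "eth0"}],
            "ips": [{"address": "10.244.2.5/24"}]}


def fake_portmap(conf, prev_result):
    # Stand-in for portmap: it relies on the IP assigned earlier in the chain.
    result = dict(prev_result)
    result["portmap_target"] = prev_result["ips"][0]["address"]
    return result


PLUGINS = {"ptp": fake_ptp, "portmap": fake_portmap}


def run_chain(command, plugin_confs):
    """Call plugins in list order for ADD and in reverse order for DEL."""
    if command not in ("ADD", "DEL"):
        raise ValueError("this sketch only models ADD and DEL")
    order = plugin_confs if command == "ADD" else list(reversed(plugin_confs))
    called, result = [], None
    for conf in order:
        called.append(conf["type"])
        if command == "ADD":
            # Each plugin receives the result of the previous one.
            result = PLUGINS[conf["type"]](conf, result)
    return called, result


confs = [{"type": "ptp"}, {"type": "portmap"}]
print(run_chain("ADD", confs)[0])  # ['ptp', 'portmap']
print(run_chain("DEL", confs)[0])  # ['portmap', 'ptp']
```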

When the kindnet plugin is invoked, it first invokes the ptp plugin to set up the network interface and assign an IP address to the container. It then invokes the portmap plugin to set up traffic forwarding from one (or more) ports on the host to the container.

What is Cilium

Cilium Building Blocks - Endpoints and Identity

k8s:app=httpbin

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=httpbin

k8s:io.kubernetes.pod.namespace=default

Kubernetes Network Policy (NetworkPolicy API)

- Pod selector

- Ingress policies

- Egress policies

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          role: db
      policyTypes:
        - Ingress
        - Egress
      ingress:
        - from:
            - ipBlock:
                cidr: 172.17.0.0/16
                except:
                  - 172.17.1.0/24
            - namespaceSelector:
                matchLabels:
                  project: myproject
            - podSelector:
                matchLabels:
                  role: frontend
          ports:
            - protocol: TCP
              port: 6379
      egress:
        - to:
            - ipBlock:
                cidr: 10.0.0.0/24
          ports:
            - protocol: TCP
              port: 5978

The policy selects the pods in the default namespace with the label role=db set. It then applies the ingress and egress policies to those pods.

The ingress policy allows traffic from three sources:

- From the IP block 172.17.0.0/16, excluding the IP block 172.17.1.0/24
- From pods in the namespace with the label project=myproject
- From pods with the label role=frontend (in the policy's own namespace)

Note

Note the use of - in the from section. Each - starts a separate entry in the list, which means that the traffic can come from any of the three sources (OR semantics).
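To make the OR semantics concrete, here is a small Python model of the ingress rule of test-network-policy. It is a simplified sketch, not how enforcement is implemented (that is up to the CNI plugin): the `src` dictionary layout and the helper names are invented for this example, and the values are copied from the policy above.

```python
import ipaddress


def in_ip_block(ip, cidr, excepts=()):
    """True if ip is inside cidr but not inside any of the except ranges."""
    addr = ipaddress.ip_address(ip)
    if addr not in ipaddress.ip_network(cidr):
        return False
    return not any(addr in ipaddress.ip_network(e) for e in excepts)


def labels_match(selector, labels):
    """matchLabels: every key/value pair in the selector must be present."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(src, protocol, port):
    """Evaluate the single ingress rule for one incoming connection.

    src is a hypothetical dict with the keys
    "ip", "namespace", "namespace_labels" and "pod_labels".
    """
    from_ip_block = in_ip_block(src["ip"], "172.17.0.0/16", ["172.17.1.0/24"])
    from_namespace = labels_match({"project": "myproject"}, src["namespace_labels"])
    # A bare podSelector only selects pods in the policy's namespace (default).
    from_pod = (src["namespace"] == "default"
                and labels_match({"role": "frontend"}, src["pod_labels"]))
    # The three `from` entries are OR-ed; the ports list is AND-ed with them.
    return ((from_ip_block or from_namespace or from_pod)
            and protocol == "TCP" and port == 6379)


frontend = {"ip": "10.0.0.7", "namespace": "default",
            "namespace_labels": {}, "pod_labels": {"role": "frontend"}}
print(ingress_allowed(frontend, "TCP", 6379))  # True
print(ingress_allowed(frontend, "TCP", 80))    # False
```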

The ports section of the ingress policy allows traffic on port 6379 using the TCP protocol. So, if any of the three sources above tries to connect to the pods selected by the pod selector, the connection is allowed only if it uses the TCP protocol and targets port 6379.

The egress policy allows traffic from the selected pods to the IP block 10.0.0.0/24 on port 5978 using the TCP protocol.

What you can't do with NetworkPolicy

- You can't use L7 rules - for example, you can't use HTTP methods, headers, or paths in your policies.

- Anything TLS related

- You can't write node-specific policies

- You can't write deny policies and more

What Cilium brings to the table

- CiliumNetworkPolicy

- CiliumClusterwideNetworkPolicy

Instead of podSelector, the selector in CiliumNetworkPolicy uses the concept of Cilium endpoints, hence the name endpointSelector. Note that it still uses matchLabels, so it isn't that different from the pod selector in the NetworkPolicy.

Instead of the generic from and to fields, there are multiple dedicated fields in the CiliumNetworkPolicy, as shown in the table below:

| Ingress | Egress | Description |
|---|---|---|
| fromCIDR | toCIDR | Allows you to specify a list of IP addresses or CIDR ranges |
| fromCIDRSet | toCIDRSet | Allows you to specify CIDR ranges together with optional exceptions (sub-ranges to exclude) |
| fromEndpoints | toEndpoints | Allows you to specify endpoints using a label selector |
| fromRequires | toRequires | Allows you to specify basic requirements (in terms of labels) for endpoints (see explanation below) |
| fromEntities | toEntities | Allows us to describe entities allowed to connect to the endpoints. Cilium defines multiple entities such as host (includes the local host), cluster (logical group of all endpoints inside a cluster), world (all endpoints outside of the cluster), and others |
| n/a | toFQDNs | Allows you to specify fully qualified domain names (FQDNs) |
| n/a | toServices | Allows you to specify Kubernetes services either using a name or a label selector |

The fromRequires field allows us to set basic requirements (in terms of labels) for endpoints that are allowed to connect to the selected endpoints. The fromRequires field doesn't stand alone; it has to be combined with one of the other from fields.

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: requires-policy
    specs:
      - description: "Allow traffic from endpoints in the same region and with the app label set to hello-world"
        endpointSelector:
          matchLabels:
            region: us-west
        ingress:
          - fromRequires:
              - matchLabels:
                  region: us-west
            fromEndpoints:
              - matchLabels:
                  app: hello-world

The above policy allows traffic to the us-west endpoints from endpoints in the same region (us-west) and with the app label set to hello-world. If the fromRequires field is omitted, the policy allows traffic from any endpoint with the app: hello-world label.

To write deny policies, use the ingressDeny and egressDeny fields (instead of ingress and egress). Note that you should check the known issues before using deny policies.

Note

The limitations around the deny policies were removed in Cilium v1.14.0.

To write L7 policies, use the rules field inside the toPorts section. Consider the following example:

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: l7-policy
    spec:
      endpointSelector:
        matchLabels:
          app: hello-world
      ingress:
        - toPorts:
            - ports:
                - port: "80"
                  protocol: TCP
              rules:
                http:
                  - method: "GET"
                    path: "/api"
                  - method: "PUT"
                    path: "/version"
                    headers:
                      - "X-some-header: hello"

The above policy states that any endpoint with the label app: hello-world can receive packets on port 80 using TCP, and the only requests allowed are GET on /api and PUT on /version when X-some-header is set to hello.

Set up a Kind cluster with Cilium

    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
      - role: control-plane
      - role: worker
      - role: worker
    networking:
      disableDefaultCNI: true
      kubeProxyMode: none

If you save the above configuration to config.yaml, you can create the cluster like this:

    kind create cluster --config=config.yaml

Note

If you'd list the nodes (kubectl get nodes) or pods (kubectl get pods -A), you'd see that the nodes are NotReady and pods are pending. That's because we disabled the default CNI, and the cluster lacks networking capabilities.

helm install cilium cilium/cilium --version 1.14.2 --namespace kube-system --set kubeProxyReplacement=strict --set k8sServiceHost=kind-control-plane --set k8sServicePort=6443 --set hubble.relay.enabled=true --set hubble.ui.enabled=true

Note

For more Helm values, check the Helm reference documentation.

cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Hubble Relay: OK

\__/¯¯\__/ ClusterMesh: disabled

\__/

Deployment hubble-ui Desired: 1, Ready: 1/1, Available: 1/1

Deployment cilium-operator Desired: 2, Ready: 2/2, Available: 2/2

Deployment hubble-relay Desired: 1, Ready: 1/1, Available: 1/1

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

Containers: hubble-ui Running: 1

cilium-operator Running: 2

hubble-relay Running: 1

cilium Running: 3

Cluster Pods: 10/10 managed by Cilium

Image versions cilium-operator quay.io/cilium/operator-generic:v1.14.2@sha256:a1982c0a22297aaac3563e428c330e17668305a41865a842dec53d241c5490ab: 2

hubble-relay quay.io/cilium/hubble-relay:v1.14.2@sha256:51b772cab0724511583c3da3286439791dc67d7c35077fa30eaba3b5d555f8f4: 1

cilium quay.io/cilium/cilium:v1.14.2@sha256:85708b11d45647c35b9288e0de0706d24a5ce8a378166cadc700f756cc1a38d6: 3

hubble-ui quay.io/cilium/hubble-ui-backend:v0.11.0@sha256:14c04d11f78da5c363f88592abae8d2ecee3cbe009f443ef11df6ac5f692d839: 1

hubble-ui quay.io/cilium/hubble-ui:v0.11.0@sha256:bcb369c47cada2d4257d63d3749f7f87c91dde32e010b223597306de95d1ecc8: 1

Installing sample workloads

Let's install the httpbin and sleep workloads:

    kubectl apply -f https://raw.githubusercontent.com/istio/istio/master/samples/sleep/sleep.yaml
    kubectl apply -f https://raw.githubusercontent.com/istio/istio/master/samples/httpbin/httpbin.yaml

Let's send a request from the sleep pod to the httpbin service to make sure there's connectivity between the two:

    kubectl exec -it deploy/sleep -- curl httpbin:8000/headers

{

"headers": {

"Accept": "*/*",

"Host": "httpbin:8000",

"User-Agent": "curl/8.1.1-DEV",

"X-Envoy-Expected-Rq-Timeout-Ms": "3600000"

}

}

Configure L3/L4 policies using NetworkPolicy resource

Let's start with a policy that denies all ingress traffic to the pods in the default namespace:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: default-deny-ingress
    spec:
      podSelector: {}
      policyTypes:
        - Ingress

Note

The empty pod selector ({}) means that the policy applies to all pods in the namespace (in this case, the default namespace).
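The reason this policy blocks everything is that NetworkPolicy is allow-list based: once at least one policy selects a pod for ingress, only traffic matched by some rule of some selecting policy is let through, and a policy without rules matches nothing. The Python sketch below models that for pods in a single namespace. The dictionary layout (`pod_selector`, `allow_from`) and the `allow_sleep` policy are invented for this example.

```python
def labels_match(selector, labels):
    """matchLabels: an empty selector ({}) matches every pod."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(policies, dst_labels, src_labels):
    """Simplified, same-namespace model of NetworkPolicy ingress evaluation."""
    selecting = [p for p in policies
                 if labels_match(p["pod_selector"], dst_labels)]
    if not selecting:
        return True  # no policy selects the pod, so it is not isolated
    # Policies are additive: any rule of any selecting policy may allow.
    return any(labels_match(selector, src_labels)
               for p in selecting
               for selector in p["allow_from"])


default_deny = {"pod_selector": {}, "allow_from": []}
# Hypothetical second policy that lets pods labeled app=sleep reach httpbin.
allow_sleep = {"pod_selector": {"app": "httpbin"},
               "allow_from": [{"app": "sleep"}]}

httpbin, sleep = {"app": "httpbin"}, {"app": "sleep"}
print(ingress_allowed([], httpbin, sleep))                           # True
print(ingress_allowed([default_deny], httpbin, sleep))               # False
print(ingress_allowed([default_deny, allow_sleep], httpbin, sleep))  # True
```

The last line also shows why several policies can target the same pod: their rules are combined, so adding an allow policy next to the default-deny one opens up exactly the traffic it describes.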

kubectl exec -it deploy/sleep -- curl httpbin:8000/headers

curl: (28) Failed to connect to httpbin port 8000 after 129674 ms: Couldn't connect to server

command terminated with exit code 28

Network flows with Hubble

We can use the cilium CLI first to establish a connection to Hubble inside the cluster, and then use the hubble observe command to look at the flows from the command line:

    # port forward to the Hubble relay running in the cluster
    cilium hubble port-forward &

    # then use hubble observe
    hubble observe --to-namespace default -f

Jun 14 18:04:42.902: default/sleep-6d68f49858-8hjzc:55276 (ID:15320) <- kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) to-overlay FORWARDED (UDP)

Jun 14 18:04:42.902: kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) <> default/sleep-6d68f49858-8hjzc (ID:15320) pre-xlate-rev TRACED (UDP)

Jun 14 18:04:42.902: kube-system/kube-dns:53 (world) <> default/sleep-6d68f49858-8hjzc (ID:15320) post-xlate-rev TRANSLATED (UDP)

Jun 14 18:04:42.902: kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) <> default/sleep-6d68f49858-8hjzc (ID:15320) pre-xlate-rev TRACED (UDP)

Jun 14 18:04:42.902: default/sleep-6d68f49858-8hjzc:55276 (ID:15320) <- kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) to-endpoint FORWARDED (UDP)

Jun 14 18:04:42.902: kube-system/kube-dns:53 (world) <> default/sleep-6d68f49858-8hjzc (ID:15320) post-xlate-rev TRANSLATED (UDP)

Jun 14 18:04:42.902: default/sleep-6d68f49858-8hjzc:46279 (ID:15320) <- kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) to-overlay FORWARDED (UDP)

Jun 14 18:04:42.902: default/sleep-6d68f49858-8hjzc:46279 (ID:15320) <- kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) to-endpoint FORWARDED (UDP)

Jun 14 18:04:42.902: kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) <> default/sleep-6d68f49858-8hjzc (ID:15320) pre-xlate-rev TRACED (UDP)

Jun 14 18:04:42.902: kube-system/kube-dns:53 (world) <> default/sleep-6d68f49858-8hjzc (ID:15320) post-xlate-rev TRANSLATED (UDP)

Jun 14 18:04:42.902: kube-system/coredns-565d847f94-2mbrp:53 (ID:21043) <> default/sleep-6d68f49858-8hjzc (ID:15320) pre-xlate-rev TRACED (UDP)

Jun 14 18:04:42.902: kube-system/kube-dns:53 (world) <> default/sleep-6d68f49858-8hjzc (ID:15320) post-xlate-rev TRANSLATED (UDP)

Jun 14 18:04:42.903: default/sleep-6d68f49858-8hjzc (ID:15320) <> default/httpbin-797587ddc5-kshhb:80 (ID:61151) post-xlate-fwd TRANSLATED (TCP)

Jun 14 18:04:42.903: default/sleep-6d68f49858-8hjzc:42068 (ID:15320) <> default/httpbin-797587ddc5-kshhb:80 (ID:61151) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 14 18:04:42.903: default/sleep-6d68f49858-8hjzc:42068 (ID:15320) <> default/httpbin-797587ddc5-kshhb:80 (ID:61151) Policy denied DROPPED (TCP Flags: SYN)

Jun 14 18:04:43.908: default/sleep-6d68f49858-8hjzc:42068 (ID:15320) <> default/httpbin-797587ddc5-kshhb:80 (ID:61151) policy-verdict:none INGRESS DENIED (TCP Flags: SYN)

Jun 14 18:04:43.908: default/sleep-6d68f49858-8hjzc:42068 (ID:15320) <> default/httpbin-797587ddc5-kshhb:80 (ID:61151) Policy denied DROPPED (TCP Flags: SYN)

...

We can use the -o flag to specify the output format as JSON and the --from-label and --to-label flags to only show the flows from sleep to httpbin endpoints:

    hubble observe -o json --from-label "app=sleep" --to-label "app=httpbin"

{

"flow": {

"time": "2023-06-14T18:04:43.908158337Z",

"verdict": "DROPPED",

"drop_reason": 133,

"ethernet": {

"source": "8e:b1:6e:2a:54:04",

"destination": "9a:b4:25:34:03:fc"

},

"IP": {

"source": "10.0.1.226",

"destination": "10.0.1.137",

"ipVersion": "IPv4"

},

"l4": {

"TCP": {

"source_port": 42068,

"destination_port": 80,

"flags": {

"SYN": true

}

}

},

"source": {

"ID": 2535,

"identity": 15320,

"namespace": "default",

"labels": [

"k8s:app=sleep",

"k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default",

"k8s:io.cilium.k8s.policy.cluster=default",

"k8s:io.cilium.k8s.policy.serviceaccount=sleep",

"k8s:io.kubernetes.pod.namespace=default"

],

"pod_name": "sleep-6d68f49858-8hjzc",

"workloads": [

{

"name": "sleep",

"kind": "Deployment"

}

]

},

"destination": {

"ID": 786,

"identity": 61151,

"namespace": "default",

"labels": [

"k8s:app=httpbin",

"k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default",

"k8s:io.cilium.k8s.policy.cluster=default",

"k8s:io.cilium.k8s.policy.serviceaccount=httpbin",

"k8s:io.kubernetes.pod.namespace=default",

"k8s:version=v1"

],

"pod_name": "httpbin-797587ddc5-kshhb",

"workloads": [

{

"name": "httpbin",

"kind": "Deployment"

}

]

},

"Type": "L3_L4",

"node_name": "kind-worker",

"event_type": {

"type": 1,

"sub_type": 133

},

"traffic_direction": "INGRESS",

"drop_reason_desc": "POLICY_DENIED",

"Summary": "TCP Flags: SYN"

},

"node_name": "kind-worker",

"time": "2023-06-14T18:04:43.908158337Z"

}
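JSON output like the flow above is convenient for scripting. As a minimal sketch, assuming each flow arrives as one JSON object per line with the field names seen in the sample above, a flow can be reduced to a one-line summary. The `summarize_flow` helper is hypothetical and not part of the Hubble CLI.

```python
import json


def summarize_flow(line):
    """Turn one JSON flow object (as text) into a short summary string."""
    flow = json.loads(line)["flow"]
    src, dst = flow["source"], flow["destination"]
    # Only TCP is handled here; other protocols leave the port as None.
    port = flow.get("l4", {}).get("TCP", {}).get("destination_port")
    reason = flow.get("drop_reason_desc", "")
    summary = (f'{src["namespace"]}/{src["pod_name"]} -> '
               f'{dst["namespace"]}/{dst["pod_name"]}:{port} '
               f'{flow["verdict"]} {reason}')
    return summary.strip()


# A trimmed copy of the flow shown above.
sample = json.dumps({"flow": {
    "verdict": "DROPPED",
    "l4": {"TCP": {"source_port": 42068, "destination_port": 80}},
    "source": {"namespace": "default", "pod_name": "sleep-6d68f49858-8hjzc"},
    "destination": {"namespace": "default", "pod_name": "httpbin-797587ddc5-kshhb"},
    "drop_reason_desc": "POLICY_DENIED",
}})
print(summarize_flow(sample))
# default/sleep-6d68f49858-8hjzc -> default/httpbin-797587ddc5-kshhb:80 DROPPED POLICY_DENIED
```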

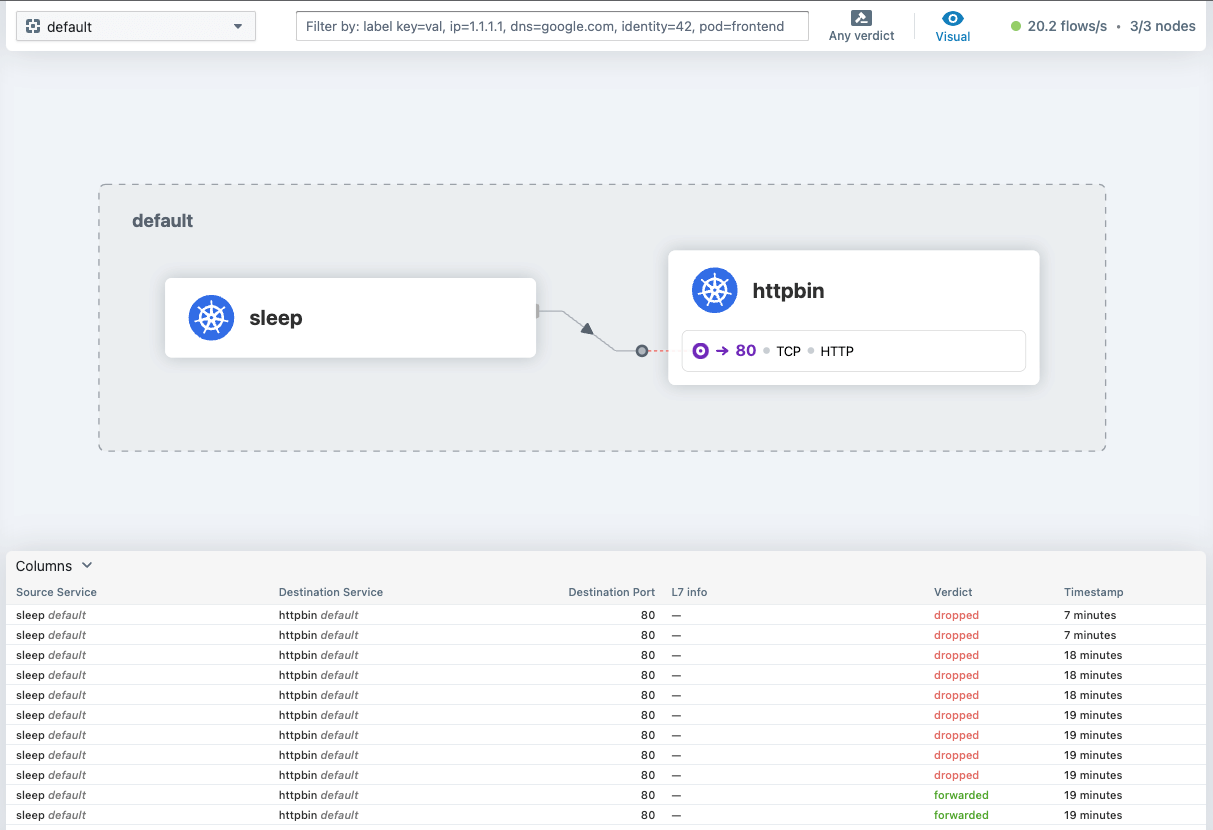

cilium hubble ui

Ingress policy to allow traffic to httpbin

Let's create a policy that allows ingress traffic from the sleep pod to the httpbin pod:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-ingress-to-httpbin
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: httpbin
      policyTypes:
        - Ingress
      ingress:
        - from:
            - podSelector:
                matchLabels:
                  app: sleep

The policy allows ingress traffic from sleep to httpbin. If you apply the policy and then try to access httpbin from sleep again, it will work.

Using namespace selector in ingress policy

Let's create another namespace (sleep) and deploy a sleep workload:

    kubectl create ns sleep
    kubectl apply -f https://raw.githubusercontent.com/istio/istio/master/samples/sleep/sleep.yaml -n sleep

If you try to access httpbin from sleep in the sleep namespace, it will fail. This is because the policy we created only allows traffic from sleep in the default namespace to httpbin in the default namespace.

Let's allow traffic from sleep in the sleep namespace to httpbin in the default namespace using the namespace selector:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-ingress-from-sleep
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: httpbin
      policyTypes:
        - Ingress
      ingress:
        - from:
            - namespaceSelector:
                matchLabels:
                  kubernetes.io/metadata.name: sleep

If we now send a request from the sleep namespace, we'll be able to access the httpbin workload in the default namespace:

    kubectl exec -it deploy/sleep -n sleep -- curl httpbin.default:8000/headers

The policy allows any pod in the sleep namespace to access the httpbin workload.

We can combine the namespaceSelector with the podSelector and make the policy more restrictive. The policy below only allows the sleep pods (pods with the app: sleep label) from the sleep namespace to access the httpbin pods:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-ingress-from-sleep
    spec:
      podSelector:
        matchLabels:
          app: httpbin
      policyTypes:
        - Ingress
      ingress:
        - from:
            - namespaceSelector:
                matchLabels:
                  kubernetes.io/metadata.name: sleep
              podSelector:
                matchLabels:
                  app: sleep
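In this policy, namespaceSelector and podSelector sit in the same from entry, so both must match (AND). Had podSelector been written as its own entry, the two selectors would have been alternatives (OR). The Python sketch below contrasts the two layouts; it is a simplified model, and the `src` dictionary and function names are invented for this example.

```python
def labels_match(selector, labels):
    return all(labels.get(k) == v for k, v in selector.items())


def peer_matches(peer, src, policy_namespace):
    """One `from` entry: all selectors inside the entry must match (AND)."""
    if "namespaceSelector" in peer:
        if not labels_match(peer["namespaceSelector"], src["namespace_labels"]):
            return False
    elif src["namespace"] != policy_namespace:
        # A podSelector on its own only selects pods in the policy's namespace.
        return False
    return labels_match(peer.get("podSelector", {}), src["pod_labels"])


def from_matches(peers, src, policy_namespace="default"):
    """The `from` list as a whole: any single entry may match (OR)."""
    return any(peer_matches(p, src, policy_namespace) for p in peers)


ns_sleep = {"kubernetes.io/metadata.name": "sleep"}
combined = [{"namespaceSelector": ns_sleep, "podSelector": {"app": "sleep"}}]
separate = [{"namespaceSelector": ns_sleep}, {"podSelector": {"app": "sleep"}}]

# A pod in the sleep namespace that does not carry the app=sleep label.
other_pod = {"namespace": "sleep", "namespace_labels": ns_sleep,
             "pod_labels": {"app": "other"}}
print(from_matches(combined, other_pod))  # False
print(from_matches(separate, other_pod))  # True
```

A single missing or extra - in the manifest is all it takes to switch between the two behaviors, so it is worth double-checking the indentation of from entries.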

The policy still allows requests from the sleep pods, but it blocks any other pods from making them. Before continuing, let's delete the policies we have created so far using the command below:

    kubectl delete netpol --all

The following policy allows ingress traffic to every pod in the namespace from any pod in the same namespace:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-ns-ingress
    spec:
      podSelector: {}
      ingress:
        - from:
            - podSelector: {}

Egress policy to deny egress traffic from sleep

Let's create a policy that denies all egress traffic from the sleep pods:

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: deny-sleep-egress
    spec:
      podSelector:
        matchLabels:
          app: sleep
      policyTypes:
        - Egress

If you try to send a request from the sleep pod to httpbin, it will fail because the policy denies any egress traffic from the sleep pods.

    kubectl exec -it deploy/sleep -- curl httpbin.default:8000/headers

    curl: (6) Could not resolve host: httpbin.default
    command terminated with exit code 6

Note that the error says httpbin.default couldn't be resolved. This specific error occurs because the sleep pod is trying to resolve the hostname httpbin.default to an IP address, but since all requests going out of the sleep pod are denied, the DNS request is blocked as well.

Instead of using the hostname (httpbin.default), use the IP address of the httpbin service. You'll get an error saying that the connection failed:

    curl: (28) Failed to connect to httpbin port 8000 after 129674 ms: Couldn't connect to server
    command terminated with exit code 28

We can allow egress traffic to kube-dns running in the kube-system namespace like this:

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: allow-dns-egress
    spec:
      podSelector: {}
      policyTypes:
        - Egress
      egress:
        - to:
            - namespaceSelector:
                matchLabels:
                  kubernetes.io/metadata.name: kube-system
              podSelector:
                matchLabels:
                  k8s-app: kube-dns

With DNS working again, we can allow egress traffic from the sleep pods to httpbin using the following policy:

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: allow-sleep-to-httpbin-egress
    spec:
      podSelector:
        matchLabels:
          app: sleep
      policyTypes:
        - Egress
      egress:
        - to:
            - podSelector:
                matchLabels:
                  app: httpbin
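The two different curl errors seen in this section come from the fact that a request by hostname needs two egress steps: a DNS query to kube-dns, then the connection to httpbin. The Python sketch below models which step fails for a given set of allowed egress destinations. It is a deliberately tiny mental model; the function name, the destination names, and the returned strings are invented for this example.

```python
def curl_outcome(egress_allowed_to):
    """Model the two egress steps behind `curl httpbin.default:8000`.

    egress_allowed_to is the set of destinations the sleep pod may reach,
    or None when no egress policy selects the pod (everything is allowed).
    """
    def allowed(destination):
        return egress_allowed_to is None or destination in egress_allowed_to

    if not allowed("kube-dns"):
        return "could not resolve host"  # the DNS query is dropped first
    if not allowed("httpbin"):
        return "failed to connect"       # the name resolves, the connection is dropped
    return "ok"


print(curl_outcome(set()))                    # deny-sleep-egress only
print(curl_outcome({"kube-dns"}))             # plus allow-dns-egress
print(curl_outcome({"kube-dns", "httpbin"}))  # plus allow-sleep-to-httpbin-egress
```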

With both policies applied, we can again send requests from the sleep pod to httpbin. Let's clean up the policies again (kubectl delete netpol --all) before we look at the L7 policies.

L7 ingress policies using CiliumNetworkPolicy

We'll keep using the httpbin and sleep workloads, but let's say we want to allow traffic to the httpbin endpoint on the /headers path and only using the GET method. We know we can't use NetworkPolicy for that, but we can use CiliumNetworkPolicy.

First, let's send a POST request and a request to the /ip path just to ensure everything works fine (make sure we don't have any left-over policies from before):

    kubectl exec -it deploy/sleep -n default -- curl -X POST httpbin.default:8000/post
    kubectl exec -it deploy/sleep -n default -- curl httpbin.default:8000/ip

{

"args": {},

"data": "",

"files": {},

"form": {},

"headers": {

"Accept": "*/*",

"Host": "httpbin.default:8000",

"User-Agent": "curl/8.1.1-DEV"

},

"json": null,

"origin": "10.0.1.226",

"url": "http://httpbin.default:8000/post"

}

{

"origin": "10.0.1.226"

}

Let's create a policy that allows traffic to the httpbin endpoint on the /headers path and only using the GET method. This means that after we apply the policy, the POST request or the request to the /ip path will fail:

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: allow-get-headers
    spec:
      endpointSelector:
        matchLabels:
          app: httpbin
      ingress:
        - fromEndpoints:
            - matchLabels:
                app: sleep
          toPorts:
            - ports:
                # Note port 80 here! We aren't talking about the service port, but the "endpoint" port, which is 80.
                - port: "80"
                  protocol: TCP
              rules:
                http:
                  - method: GET
                    path: /headers
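As a rough mental model of how the http rules in this policy are evaluated, a request is allowed if it matches at least one rule, and everything else is rejected. The Python sketch below encodes that. It assumes that method and path are regular expressions that have to match the whole value, which is a simplification; check the Cilium documentation for the exact matching semantics. The `http_allowed` helper is invented for this example.

```python
import re

# The http rules from the allow-get-headers policy above.
HTTP_RULES = [{"method": "GET", "path": "/headers"}]


def http_allowed(method, path, rules=HTTP_RULES):
    """Allow a request if at least one rule matches both method and path.

    Assumption: a field missing from a rule matches anything, and present
    fields are regular expressions that must match the entire value.
    """
    return any(
        re.fullmatch(rule.get("method", ".*"), method) is not None
        and re.fullmatch(rule.get("path", ".*"), path) is not None
        for rule in rules
    )


print(http_allowed("GET", "/headers"))  # True
print(http_allowed("POST", "/post"))    # False
print(http_allowed("GET", "/ip"))       # False
```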

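If we repeat the two requests from before, the POST request fails, and the request to the /ip path fails as well:

    Access denied
    Access denied

A GET request to the /headers path, however, is allowed:

    kubectl exec -it deploy/sleep -n default -- curl httpbin.default:8000/headers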

{

"headers": {

"Accept": "*/*",

"Host": "httpbin.default:8000",

"User-Agent": "curl/8.1.1-DEV",

"X-Envoy-Expected-Rq-Timeout-Ms": "3600000"

}

}

Note the X-Envoy-Expected-Rq-Timeout-Ms header in the output. It is set by the Envoy proxy that is spun up inside the Cilium agent pod and handles the L7 policies.

Frequently asked questions

1. What is a Kubernetes NetworkPolicy?

2. What is Cilium and how does it relate to NetworkPolicy?

3. What is Hubble and how does it work with Cilium?

4. What does a NetworkPolicy look like in Kubernetes?

A NetworkPolicy consists of podSelectors, policyTypes, and ingress/egress rules. It tells Kubernetes which pods the policy applies to and what the inbound and outbound traffic rules are.

5. What is the purpose of Ingress and Egress policies in Kubernetes?

6. Can I apply more than one NetworkPolicy to a pod?

7. What is CiliumNetworkPolicy?

8. How can I write deny policies?

You can write deny policies with CiliumNetworkPolicy by using the ingressDeny/egressDeny fields.